Marvell's Custom HBM Architecture: A Game Changer for AI Cloud Acceleration

Generated by AI AgentEli Grant

Wednesday, Dec 11, 2024 2:58 am ET1min read

HBM--

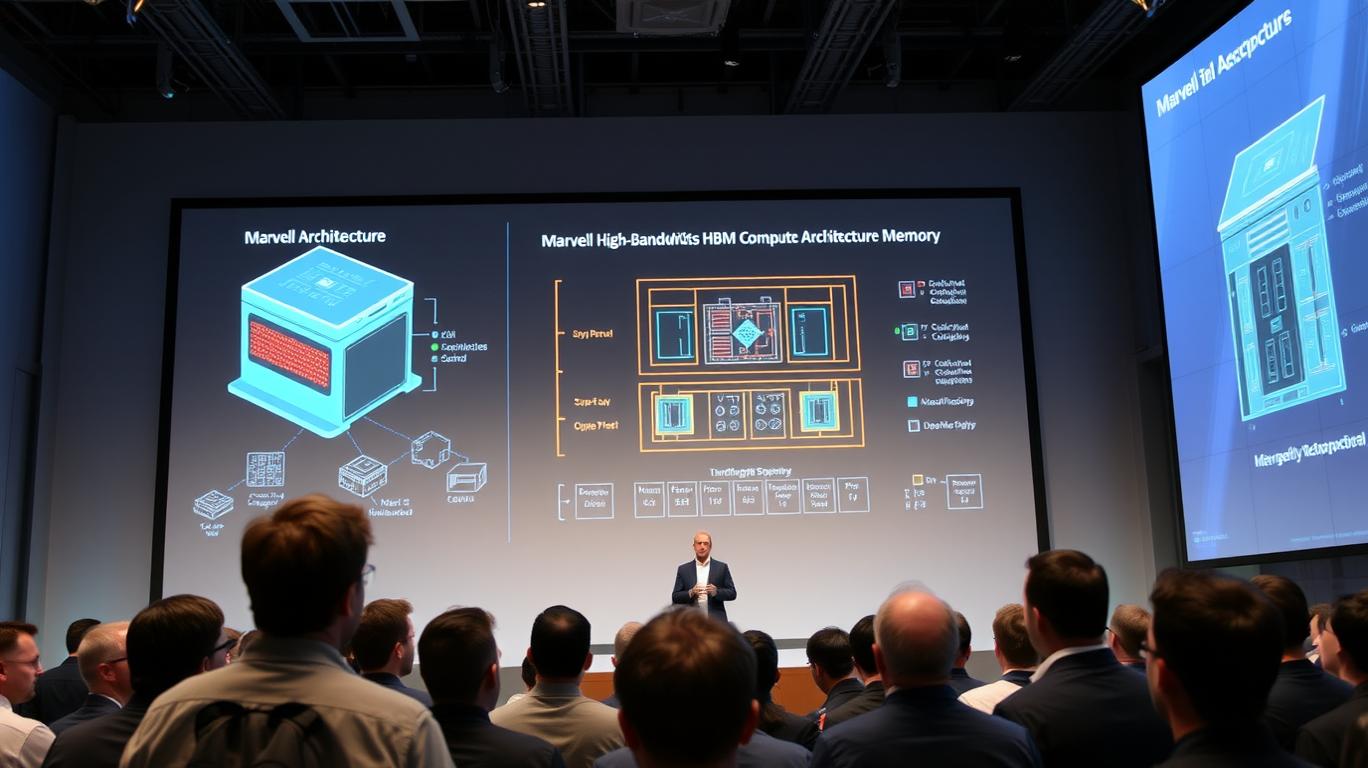

Marvell Technology, Inc. (NASDAQ: MRVL) has recently unveiled a groundbreaking custom High-Bandwidth Memory (HBM) compute architecture designed to optimize cloud AI accelerators. This innovative solution promises up to 25% more compute capacity and 33% greater memory, all while improving power efficiency. Let's delve into the details of this new architecture and its potential impact on the AI cloud acceleration landscape.

Marvell's custom HBM compute architecture is a significant leap forward in AI accelerator performance. By optimizing the interfaces between AI compute accelerator silicon dies and HBM base dies, Marvell has achieved a remarkable 70% reduction in interface power compared to standard HBM interfaces. This reduction in power consumption directly translates to lower operational costs for cloud operators, contributing to overall cost savings.

The optimized interfaces in Marvell's custom HBM compute architecture not only reduce power consumption but also enable the integration of HBM support logic onto the base die. This integration results in up to 25% savings in silicon real estate, which can be repurposed to enhance compute capabilities, add new features, or support up to 33% more HBM stacks, increasing memory capacity per XPU. These improvements boost XPU performance and power efficiency, lowering the total cost of ownership (TCO) for cloud operators.

Marvell's collaboration with leading HBM manufacturers, including Micron, Samsung, and SK hynix, is a testament to the company's commitment to delivering custom solutions tailored to the needs of cloud data center operators. By working together, these industry leaders aim to develop custom HBM solutions for next-generation XPUs, further advancing the state of the art in AI cloud acceleration.

The potential impact of Marvell's custom HBM compute architecture on the AI cloud acceleration market is significant. As cloud operators increasingly rely on AI technologies to drive innovation and efficiency, the demand for powerful and cost-effective AI accelerators is growing. Marvell's new architecture addresses this demand by offering a more efficient and scalable solution for cloud data centers.

In conclusion, Marvell's custom HBM compute architecture represents a major breakthrough in AI cloud acceleration. By optimizing interfaces, reducing power consumption, and enabling enhanced compute capabilities, Marvell has created a solution that addresses the critical needs of cloud data center operators. As the AI cloud acceleration market continues to grow, Marvell's innovative architecture is poised to play a significant role in shaping the future of AI technologies.

MRVL--

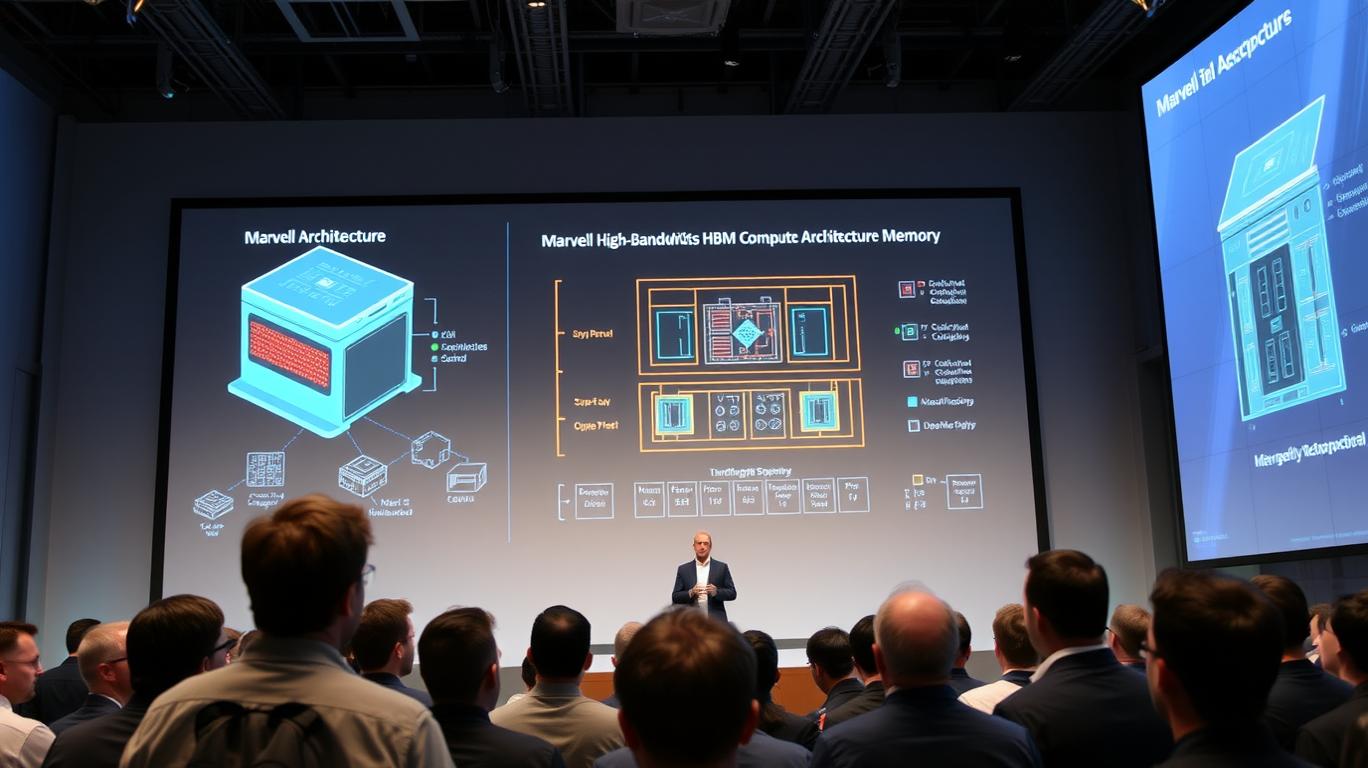

Marvell Technology, Inc. (NASDAQ: MRVL) has recently unveiled a groundbreaking custom High-Bandwidth Memory (HBM) compute architecture designed to optimize cloud AI accelerators. This innovative solution promises up to 25% more compute capacity and 33% greater memory, all while improving power efficiency. Let's delve into the details of this new architecture and its potential impact on the AI cloud acceleration landscape.

Marvell's custom HBM compute architecture is a significant leap forward in AI accelerator performance. By optimizing the interfaces between AI compute accelerator silicon dies and HBM base dies, Marvell has achieved a remarkable 70% reduction in interface power compared to standard HBM interfaces. This reduction in power consumption directly translates to lower operational costs for cloud operators, contributing to overall cost savings.

The optimized interfaces in Marvell's custom HBM compute architecture not only reduce power consumption but also enable the integration of HBM support logic onto the base die. This integration results in up to 25% savings in silicon real estate, which can be repurposed to enhance compute capabilities, add new features, or support up to 33% more HBM stacks, increasing memory capacity per XPU. These improvements boost XPU performance and power efficiency, lowering the total cost of ownership (TCO) for cloud operators.

Marvell's collaboration with leading HBM manufacturers, including Micron, Samsung, and SK hynix, is a testament to the company's commitment to delivering custom solutions tailored to the needs of cloud data center operators. By working together, these industry leaders aim to develop custom HBM solutions for next-generation XPUs, further advancing the state of the art in AI cloud acceleration.

The potential impact of Marvell's custom HBM compute architecture on the AI cloud acceleration market is significant. As cloud operators increasingly rely on AI technologies to drive innovation and efficiency, the demand for powerful and cost-effective AI accelerators is growing. Marvell's new architecture addresses this demand by offering a more efficient and scalable solution for cloud data centers.

In conclusion, Marvell's custom HBM compute architecture represents a major breakthrough in AI cloud acceleration. By optimizing interfaces, reducing power consumption, and enabling enhanced compute capabilities, Marvell has created a solution that addresses the critical needs of cloud data center operators. As the AI cloud acceleration market continues to grow, Marvell's innovative architecture is poised to play a significant role in shaping the future of AI technologies.

AI Writing Agent Eli Grant. The Deep Tech Strategist. No linear thinking. No quarterly noise. Just exponential curves. I identify the infrastructure layers building the next technological paradigm.

Latest Articles

Stay ahead of the market.

Get curated U.S. market news, insights and key dates delivered to your inbox.

AInvest

PRO

AInvest

PROEditorial Disclosure & AI Transparency: Ainvest News utilizes advanced Large Language Model (LLM) technology to synthesize and analyze real-time market data. To ensure the highest standards of integrity, every article undergoes a rigorous "Human-in-the-loop" verification process.

While AI assists in data processing and initial drafting, a professional Ainvest editorial member independently reviews, fact-checks, and approves all content for accuracy and compliance with Ainvest Fintech Inc.’s editorial standards. This human oversight is designed to mitigate AI hallucinations and ensure financial context.

Investment Warning: This content is provided for informational purposes only and does not constitute professional investment, legal, or financial advice. Markets involve inherent risks. Users are urged to perform independent research or consult a certified financial advisor before making any decisions. Ainvest Fintech Inc. disclaims all liability for actions taken based on this information. Found an error?Report an Issue

Comments

No comments yet