The Rise and Reckoning of AI-Driven EdTech Investments

The Dual Edge of AI Integration: Growth and Risks

While AI-driven EdTech promises to democratize education and enhance efficiency, its rapid adoption has exposed critical vulnerabilities. A 2025 study reveals that 50% of students feel less connected to teachers due to AI integration, and 70% of educators express concerns about weakened critical thinking and research skills among learners. These findings underscore a growing tension between technological efficiency and the irreplaceable human elements of education. Additionally, the lack of institutional policies governing generative AI tools (fewer than 10% of schools had formal guidelines by 2024) has created a regulatory vacuum, enabling ethical risks such as academic cheating and data privacy breaches. Cybersecurity threats further compound these challenges, with 63% of educational technology specialists citing heightened concerns over AI-related cyberattacks.

The market is also grappling with structural risks. Major tech companies offering free, built-in AI solutions are eroding the business models of EdTech startups, while algorithmic biases in AI systems risk perpetuating educational inequities. For instance, AI-driven platforms may inadvertently encode cultural or socioeconomic biases, disadvantaging marginalized student populations. These systemic issues demand a reevaluation of how AI is designed, deployed, and governed in educational contexts.

Anthropology and Interdisciplinary Approaches: A Path to Sustainable Innovation

Anthropology and interdisciplinary frameworks offer a critical lens to address these challenges. By decoding cultural patterns and human behaviors, anthropological insights help bridge the human-technology gap, ensuring AI tools align with diverse educational ecosystems. Ethnographic methods, such as immersive research and cultural analysis, are increasingly used to design systems that foster user engagement while mitigating risks like overreliance on AI. For example, the Alaska Department of Education's AI framework, which integrates human rights principles (e.g., equitable education and privacy), exemplifies how anthropological approaches can shape ethical AI adoption.

Interdisciplinary collaboration also emphasizes "critical AI literacy," enabling educators and learners to interrogate the societal implications of AI systems. This approach is vital in higher education, where AI is being leveraged for curriculum development and research, but where concerns about academic integrity and data ethics persist. By embedding anthropological perspectives into AI design, EdTech firms can create culturally responsive tools that prioritize inclusivity and ethical governance.

Regulatory and Ethical Frameworks: Navigating the 2025 Landscape

The regulatory landscape for AI in education is evolving rapidly. UNESCO's AI competency frameworks and the EU AI Act emphasize principles such as transparency, accountability, and human-centric design, setting global benchmarks for ethical AI deployment. At the state level, initiatives like Alaska's AI Framework highlight the importance of balancing innovation with safeguards, such as requiring proper attribution of AI-generated content and ensuring generative AI augments rather than replaces human creativity.

However, regulatory compliance remains a double-edged sword. While frameworks like the EU AI Act and U.S. data privacy laws provide clarity, they also increase operational costs for startups, particularly those lacking the resources to navigate complex compliance requirements. For instance, adherence to global standards such as WCAG (Web Content Accessibility Guidelines) and LTI (Learning Tools Interoperability) is critical for market access but demands significant technical and financial investment.

Case Studies: Anthropology-Driven Innovations and Market Sustainability

Concrete examples illustrate how anthropology-driven AI EdTech innovations are reshaping market sustainability. At Stanford and the University of Toronto, AI regulatory sandboxes have been employed to test generative AI tools in controlled environments, balancing innovation with ethical oversight. These sandboxes, which incorporate multidisciplinary expertise, demonstrate how structured experimentation can mitigate risks while fostering responsible AI adoption.

Another compelling case is the development of AI-driven fire-prediction models in K-12 education, which use anthropological insights to teach students about environmental sustainability through scenario-based learning. By integrating AI with anthropogenic environmental data, these tools not only enhance decision-making skills but also align with UNESCO's Education for Sustainable Development goals. Such innovations highlight the potential for AI to address global challenges while adhering to ethical and cultural norms.

Weighing Future Demand Against Risks

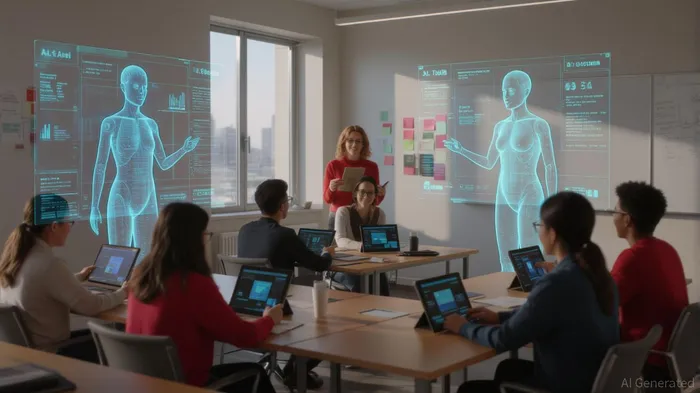

Despite the risks, the demand for AI-driven EdTech is poised to grow, driven by advancements in VR, AR, and gamified learning, which are projected to push the global EdTech market to USD 445.94 billion by 2029. However, investors must remain vigilant. The Asia-Pacific region's 48% CAGR underscores the importance of culturally adaptive AI solutions, while North America's dominance in revenue share highlights the need for robust regulatory alignment.

The key to long-term sustainability lies in harmonizing technological innovation with ethical and anthropological considerations. Startups that prioritize human-centric design, interdisciplinary collaboration, and compliance with emerging regulations will be better positioned to navigate the evolving landscape. Conversely, firms that overlook cultural adaptability or ethical governance risk reputational damage and regulatory penalties.

Conclusion

The AI-driven EdTech market is at a crossroads. While its growth trajectory is undeniable, the sector's long-term viability hinges on addressing systemic risks through anthropology-informed innovation and robust regulatory frameworks. Investors must prioritize firms that embed ethical principles, cultural responsiveness, and interdisciplinary expertise into their AI strategies. As the market matures, those who navigate the "reckoning" of AI's ethical and societal implications will define the next era of educational technology.
