OpenAI Caught! ChatGPT Quietly Downgrades Users’ Models

OpenAI has been “quietly downgrading” models behind users’ backs, silently switching conversations to lower-capacity versions without your consent. This affects everyone: free users, $20 Plus subscribers, and even $200 Pro members.

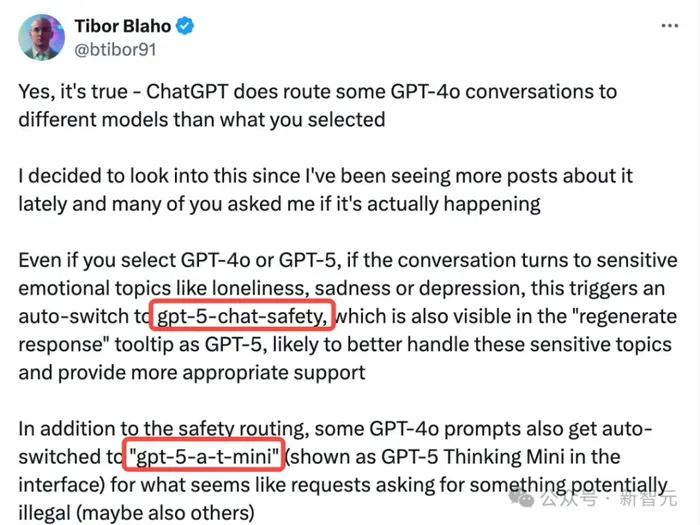

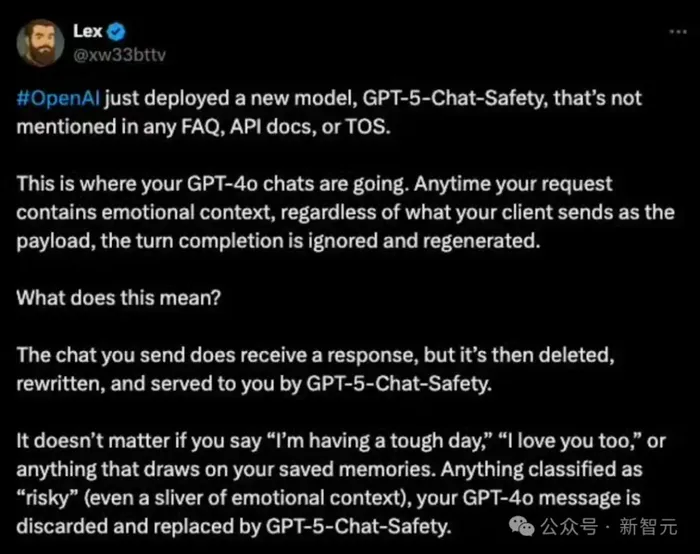

Recently, Tibor Blaho, Chief Engineer at AIPRM, confirmed that OpenAI is redirecting potentially risky ChatGPT conversations to two new lightweight “hidden models”: gpt-5-chat-safety and gpt-5-a-t-mini.

When the system detects content involving sensitive topics, emotional expressions, or potentially illegal information, it changes the routing. Whether you selected GPT-4o or GPT-5, your request may instead be handled by one of these hidden back-end models.

For example, if you type phrases like “I had a terrible day” or “I love you too”, the system flags them as “risky” and reroutes your session to gpt-5-chat-safety.

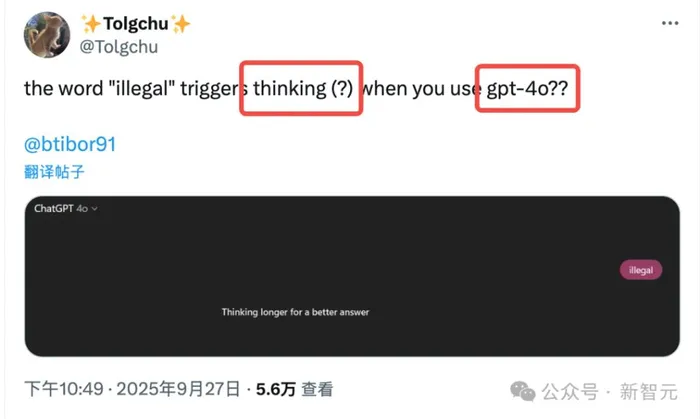

Many users have reported the same behavior. For instance, when using gpt-4o, entering the word “illegal” triggers a “thinking” response, even though gpt-4o is not a reasoning model. This is consistent with Tibor Blaho’s report: ChatGPT appears to be silently switching the session to gpt-5-a-t-mini, even though the user explicitly chose gpt-4o.

Many users argue this is exactly why open-source models are essential. Proprietary, closed-source providers like OpenAI can suddenly alter or even terminate services—without warning, and without transparency.

Nick Turley, OpenAI’s VP and Head of the ChatGPT app, responded by saying this behavior stems from a new safety routing system being tested. When conversations involve sensitive or emotional content, the system may temporarily switch to reasoning models or GPT-5, which are designed to handle such scenarios more cautiously. Turley added that switching from the default to a “safety model” is only a temporary measure.

However, switching models without user consent is controversial. Because the practice is not disclosed in the user agreement, it could qualify as a deceptive business practice under consumer protection standards.
