Nvidia's Volatility in Light of Broadcom's Earnings: A New Dynamic in the AI Chip Ecosystem?

The AI semiconductor sector is witnessing a seismic shift as two titans, Nvidia and Broadcom, vie for dominance. While Nvidia's Blackwell platform is widely regarded as the gold standard for AI training, Broadcom's aggressive expansion into custom ASICs and hyperscaler partnerships is reshaping the competitive landscape. This article examines how Broadcom's Q2 2025 earnings and strategic moves are challenging Nvidia's leadership, and what this means for investors navigating the AI revolution.

The Nvidia-Broadcom Rivalry: A Tale of Two Strategies

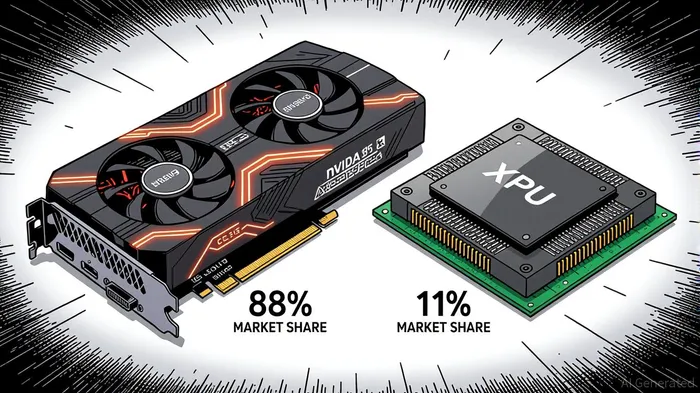

Nvidia's Q2 2025 results were nothing short of staggering. On $46.7 billion in revenue, the company's Data Center segment accounted for 88% of total sales, driven by the Blackwell B200 GPU. Nvidia has marketed Blackwell as delivering up to 30x the inference performance of its predecessor, and the platform has cemented the company's dominance in AI training workloads. Its 72.7% non-GAAP gross margin and $8.68 billion in R&D spending (18.5% of revenue) underscore its technological moat. However, this success comes with risks: Nvidia's 80–90% share of the AI accelerator market rests heavily on a single product line, and U.S. export restrictions have slashed H20 sales to China-based customers.
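As a quick sanity check on the Nvidia figures above, the sketch below derives the implied Data Center revenue and the R&D-to-revenue ratio from the quoted inputs alone. The helper names are hypothetical and the inputs are the article's rounded numbers, so the results are approximate.

```python
# Back-of-the-envelope check of the Nvidia figures quoted in the article.
# All amounts are in billions of US dollars; inputs are rounded as reported.
total_revenue = 46.7
data_center_share = 0.88
rnd_spend = 8.68


def implied_segment_revenue(total: float, share: float) -> float:
    """Hypothetical helper: segment revenue implied by a total and a share."""
    return total * share


def share_of_revenue(amount: float, total: float) -> float:
    """Hypothetical helper: fraction of total revenue an amount represents."""
    return amount / total


data_center = implied_segment_revenue(total_revenue, data_center_share)
rnd_ratio = share_of_revenue(rnd_spend, total_revenue)

print(f"Implied Data Center revenue: ${data_center:.1f}B")
print(f"R&D as a share of revenue: {rnd_ratio:.1%}")
```

Running it gives about $41.1B of Data Center revenue and an R&D ratio of about 18.6%. That ratio sits close to the 18.5% cited, and the small gap is consistent with rounding in the inputs.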

Broadcom, meanwhile, is taking a different path. Its Q2 2025 AI revenue hit $4.4 billion (46% YoY growth), and a $10 billion order from OpenAI (rumored to be for custom XPUs) signals a strategic breakthrough. The company's custom ASICs are reported to offer 75% lower costs and 50% lower power consumption than Nvidia's GPUs, making them attractive to hyperscalers like Meta and Google. Broadcom's Tomahawk 6 switch, with 102.4 Tbps of throughput, further strengthens its position in distributed AI workloads. Its adjusted EBITDA margin of 67% of revenue also compares favorably with Nvidia's 32.9% operating cash flow margin, although the two are different metrics and not directly comparable, so the contrast is only a rough signal of operational efficiency.
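A 46% year-over-year increase also tells us roughly where Broadcom's AI revenue stood a year earlier. The sketch below inverts the growth rate, assuming the 46% applies directly to the $4.4 billion quarterly figure. The function name is a hypothetical helper, not something from the source.

```python
# Recover the prior-year value implied by a current value and its YoY growth.
# Amounts are in billions of US dollars; growth is a fraction (0.46 = 46%).


def implied_prior_value(current: float, yoy_growth: float) -> float:
    """Hypothetical helper: invert current = prior * (1 + growth)."""
    return current / (1.0 + yoy_growth)


ai_revenue_q2 = 4.4
yoy_growth = 0.46

prior = implied_prior_value(ai_revenue_q2, yoy_growth)
print(f"Implied prior-year quarterly AI revenue: ${prior:.2f}B")
```

This works out to roughly $3.0 billion for the same quarter a year earlier. The result is only as precise as the rounded growth rate and revenue figure it starts from.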

Strategic Industry Leadership: Training vs. Inference

Nvidia's strength lies in its CUDA ecosystem and partnerships with AI model developers, which lock in demand for its training chips. The Blackwell B200's 1,000W+ power consumption and reliance on liquid cooling, however, create operational complexity, a stark contrast to Broadcom's energy-efficient XPUs. While Nvidia dominates the $92 billion AI chip market in 2025, Broadcom is carving out a niche in inference and hyperscaler deployments.

Broadcom's Scale-Up Ethernet (SUE) initiative is another wildcard. By promoting open Ethernet standards, it challenges Nvidia's NVLink technology, which ties customers to proprietary ecosystems. This move aligns with hyperscalers' growing desire to avoid vendor lock-in, a trend that could accelerate as AI infrastructure spending reaches $150–400 billion by 2030.

Competitive Positioning: Risks and Opportunities

For Nvidia, the risks are twofold: geopolitical exposure and product concentration. The absence of H20 sales to China in Q2 2025 highlights how fragile its position is in a historically major market. Additionally, production delays for the Blackwell B200 and reliance on the underpowered B200A variant have spooked investors. Yet its CUDA ecosystem and NIM inference framework provide a durable advantage in training workloads, where performance trumps cost.

Broadcom's path is not without pitfalls. Its XPUs lack the software maturity of Nvidia's CUDA, and its reliance on hyperscaler contracts (e.g., OpenAI, Meta) exposes it to client-specific risks. However, its VMware integration and expanding hyperscaler partnerships are closing the gap in ecosystem capabilities. Analysts project Broadcom's AI revenue could reach $33 billion by 2026, driven by XPUs and Tomahawk Ultra networking chips.
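To put the $33 billion projection in context, it helps to compare it with Broadcom's current AI revenue pace. The sketch below makes two loud assumptions. First, it treats the projection as a full-year figure. Second, it annualizes the Q2 result by multiplying by four, which ignores seasonality and growth within the year. The variable names are illustrative only.

```python
# Compare a projected annual figure with a naive annualized run rate.
# Assumptions: the $33B projection is a full-year number, and the run rate
# is simply 4x the latest quarter. Amounts are in billions of US dollars.
quarterly_ai_revenue = 4.4
projected_annual = 33.0

run_rate = quarterly_ai_revenue * 4
required_growth = projected_annual / run_rate - 1

print(f"Annualized AI revenue run rate: ${run_rate:.1f}B")
print(f"Growth needed to reach the projection: {required_growth:.1%}")
```

Under these assumptions, the run rate is about $17.6 billion. Reaching $33 billion would therefore require roughly 87.5% growth over that pace, which shows how much of the projection depends on new XPU and networking volume.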

Investment Implications: Diversification in a Bifurcated Market

The AI chip market is bifurcating: Nvidia leads in training, while Broadcom gains traction in inference and hyperscaler infrastructure. For investors, this suggests a diversified approach. Nvidia's stock remains a high-conviction play for those betting on AI training's long-term growth, but its volatility—driven by geopolitical risks and product cycles—demands caution.

Broadcom, on the other hand, offers a compelling alternative for investors seeking exposure to cost-efficient AI infrastructure. Its 11 consecutive quarters of AI revenue growth, its partnership with OpenAI, and its VMware software business position it as a key player in the inference segment. However, its market share (11% in 2025) is still dwarfed by Nvidia's 80–90%, and ecosystem maturity remains a hurdle.

Conclusion: A New Dynamic, Not a Zero-Sum Game

The AI semiconductor sector is evolving into a duopoly where Nvidia and Broadcom serve complementary roles. Nvidia's dominance in training workloads and software ecosystems is unlikely to wane soon, but Broadcom's cost-effective, energy-efficient solutions are reshaping the inference and hyperscaler markets. For investors, the key is to balance exposure to both leaders while monitoring risks like geopolitical tensions, supply chain bottlenecks, and ecosystem fragmentation.

As the AI revolution accelerates, the companies that thrive will be those that adapt to the dual imperatives of performance and cost efficiency. In this new dynamic, Nvidia and Broadcom are not just competitors—they are co-architects of the AI infrastructure that will power the next decade of innovation.
