Google Pushes TPUs Aggressively, Challenging Nvidia’s GPUs

According to The Information, Google has recently approached small cloud service providers that lease Nvidia chips, discussing the possibility of hosting Google’s artificial intelligence chips in their data centers.

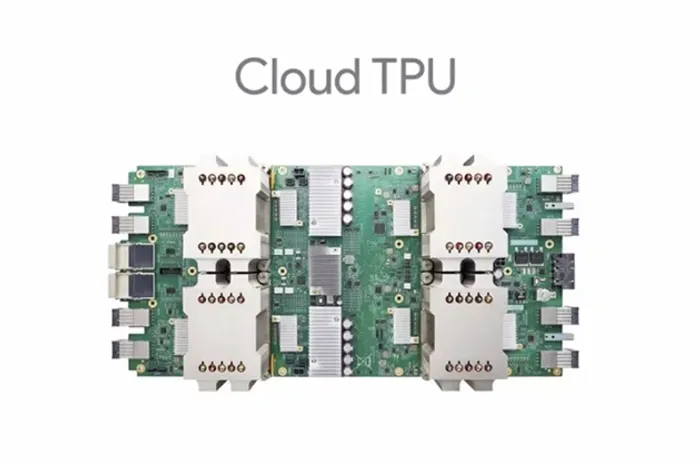

Google has already reached an agreement with at least one cloud provider—London-based Fluidstack—which will host Google’s Tensor Processing Units (TPUs) in its New York data center.

Google has also tried to strike similar deals with other cloud providers that depend on Nvidia, including Crusoe, which builds Nvidia-based data centers for OpenAI, and CoreWeave, which leases Nvidia chips to Microsoft and has also signed rental agreements with OpenAI.

It is not yet clear why Google has decided, for the first time, to deploy TPUs in third-party data centers. One reason could be that its own data center construction is lagging behind chip demand. Another possibility is that Google hopes these providers can help it reach new TPU customers, such as AI application developers.

This model is quite similar to the business practice of leasing Nvidia GPUs, putting Google in more direct competition with Nvidia.

Either way, deploying TPUs in other providers’ facilities could reduce reliance on Nvidia GPUs.

Gil Luria, an analyst at investment bank DA Davidson, noted that cloud providers and major AI developers are increasingly interested in using TPUs to reduce dependency on Nvidia. “Nobody likes being tied to a single source when it comes to critical infrastructure,” he said.

Google Woos Nvidia’s Allies to Intensify Competition

Market analysts believe Google is trying to attract Nvidia’s closest partners: emerging cloud providers that rent out Nvidia chips almost exclusively and are more willing than traditional providers to purchase Nvidia’s full range of products. Nvidia has invested in several of these companies and supplies them with its most in-demand chips.

Google began developing TPU chips about a decade ago, primarily to support its own AI technologies such as the Gemini large language model. Over time, it has also rented TPUs to external customers.

In June, OpenAI rented Google’s TPUs to power ChatGPT and other products, its first large-scale use of non-Nvidia chips. This arrangement helped OpenAI reduce reliance on Microsoft’s data centers while giving Google an opportunity to challenge Nvidia’s dominance in the AI chip market. OpenAI also hopes TPUs rented via Google Cloud will help lower inference computing costs.

Beyond OpenAI, Apple, Safe Superintelligence, and Cohere have also rented Google Cloud TPUs, partly because some of their employees previously worked at Google and are familiar with how TPUs work.

Google executives have been discussing strategies to increase TPU-related revenue while reducing dependence on costly Nvidia chips. To win over smaller cloud providers, Google is even willing to offer financial backing: if Fluidstack struggles to cover its New York data center leasing costs, Google will provide up to $3.2 billion in support.

Nvidia CEO Jensen Huang, however, has been dismissive of TPUs, stating that AI application developers prefer GPUs for their versatility and for the powerful Nvidia software ecosystem that supports them.
