From Nvidia to Broadcom: AI Trends Shaping

Since the launch of ChatGPT, the world has entered the AI era, and Nvidia has been the uncontested leader in AI hardware, with its market capitalization repeatedly reaching the top of the global rankings.

Despite persistent warnings from analysts about potential competition, Nvidia's empire has seemed unassailable. Recent developments, however, suggest that competitors may be catching up, and this time the threat is very real.

The Changing Landscape: Broadcom's Emergence as a Competitor

Halfway through December, Broadcom led the AI chip sector with a 50% surge in its stock price for the month, pushing its market capitalization past $1 trillion. Nvidia, by contrast, has pulled back 15% from its highs, falling into correction territory and slipping to third place in market capitalization, behind Apple and Microsoft.

This shift signals a potential turning point for the AI chip market, and many are questioning whether Nvidia's long-standing dominance could be in jeopardy. Broadcom, a company traditionally known for networking and other infrastructure semiconductors, is championing a different kind of AI hardware: ASICs (Application-Specific Integrated Circuits), which are challenging Nvidia's GPUs (Graphics Processing Units) in the AI space.

The Transition from GPUs to ASICs in AI Computation

For years, GPUs have been the go-to hardware for training AI models thanks to their massive parallel processing power. As AI commercialization progressed, companies competed fiercely for Nvidia's most powerful GPUs and paid premium prices to secure them, and supply shortages turned the chips into scarce, highly sought-after assets.

However, as AI adoption grows, a shift in hardware preference is becoming apparent: GPUs have long been synonymous with AI training, but the rise of ASICs is beginning to challenge that status quo.

Why ASICs?

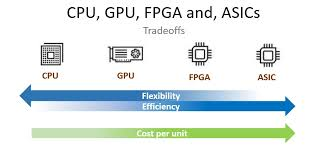

Unlike general-purpose chips, ASICs are designed for one specific task, which lets them deliver high efficiency and performance for that targeted workload. GPUs excel at massively parallel computation, but they can run into memory limitations when executing large-scale matrix multiplications. ASICs, by contrast, are optimized for a single workload, offering higher speed and lower energy consumption, and they become more cost-effective to produce once manufactured in volume.
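
The point that ASICs become cheaper "once mass-produced" comes down to fixed versus per-unit costs: a custom chip carries a large one-time design cost (commonly called non-recurring engineering, or NRE), but each unit can then cost less than an off-the-shelf GPU. The Python sketch below finds the volume at which the two options cost the same. All figures are hypothetical placeholders chosen for illustration, not real prices for any Nvidia or Broadcom product, and the model deliberately ignores factors such as software ecosystems and performance differences.

```python
import math


def total_cost(fixed_cost: float, unit_cost: float, units: int) -> float:
    """Total spend for `units` chips: one-time cost plus per-unit cost."""
    return fixed_cost + unit_cost * units


def break_even_units(asic_nre: float, asic_unit_cost: float, gpu_unit_cost: float) -> int:
    """Smallest volume at which the custom ASIC costs no more than buying GPUs.

    Assumes GPUs carry no one-time cost and the ASIC is cheaper per unit.
    """
    savings_per_unit = gpu_unit_cost - asic_unit_cost
    if savings_per_unit <= 0:
        raise ValueError("ASIC must be cheaper per unit to ever break even")
    return math.ceil(asic_nre / savings_per_unit)


# Hypothetical inputs for illustration only (not real prices).
ASIC_NRE = 50_000_000      # one-time design and tape-out cost, USD
ASIC_UNIT_COST = 10_000    # per-chip cost once in production, USD
GPU_UNIT_COST = 25_000     # per-chip price of a merchant GPU, USD

n = break_even_units(ASIC_NRE, ASIC_UNIT_COST, GPU_UNIT_COST)
print(f"Break-even volume: {n:,} chips")
for units in (1_000, n, 100_000):
    asic = total_cost(ASIC_NRE, ASIC_UNIT_COST, units)
    gpu = total_cost(0, GPU_UNIT_COST, units)
    print(f"{units:>7,} chips  ASIC ${asic:,.0f}  GPU ${gpu:,.0f}")
```

With these made-up inputs the ASIC only pays off beyond a few thousand chips; the larger the deployment, the more the one-time cost is spread out, which is consistent with the article's point that the biggest tech companies are the ones building custom silicon.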

As demand for efficient AI computing grows, ASICs are becoming the preferred option, especially for inference, which means running already-trained models to make predictions. ASICs are seen as a more scalable, cost-efficient way to handle the growing workload of AI applications.

Tech Giants are Shifting Towards Custom AI Chips

In light of rising GPU prices and long delivery times, many tech giants are pivoting towards developing their own AI chips, with ASICs leading the charge. Notable companies like Google, Amazon, Microsoft, Meta, and Tesla have already made significant strides with ASIC-based chips.

Google, for instance, deployed its first-generation Tensor Processing Unit (TPU) internally in 2015, making it a pioneer in the ASIC space. Amazon's Trainium, Microsoft's Maia, Meta's MTIA, and Tesla's Dojo are all examples of custom-built AI chips designed as ASICs.

Broadcom is now positioning itself as a key player in this market, designing custom ASICs for several tech giants and continuing to innovate in the space. The company recently reported earnings that beat expectations, and its stock surged in response. During the earnings call, Broadcom's CEO forecast that the market for custom AI chips such as ASICs would reach between $60 billion and $90 billion by 2027, up from just $12 billion today.

Broadcom also anticipates that ASICs will account for 50% of AI FLOPS (floating-point operations, a common measure of computing power), and that all custom chips used by cloud service providers (CSPs) will be ASIC-based in the near future.

Investment Community's Shift Towards ASICs

Broadcom's move into the ASIC space has caught the attention of investment banks, with many now seeing it as a major growth opportunity. Morgan Stanley recently published a report that highlights how ASICs are expected to take more market share from Nvidia's GPUs due to their superior performance and cost-efficiency for specific tasks. The firm also noted that the rise of ASICs does not necessarily spell the end for GPUs, as both technologies are expected to coexist in the market, each serving different needs.

Morgan Stanley predicts that the AI ASIC market will grow from $12 billion in 2024 to $30 billion by 2027, with a compound annual growth rate (CAGR) of 34%.
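
A compound annual growth rate is the constant yearly rate r that turns a starting value V0 into an ending value Vn over n years: r = (Vn / V0)^(1/n) - 1. The short Python sketch below applies that formula to the rounded figures quoted in this article, assuming three annual compounding periods between 2024 (or "today", in Broadcom's case) and 2027. On those rounded endpoints, Morgan Stanley's forecast works out to roughly 36% a year rather than 34%; the gap may reflect unrounded figures or a different base year in the underlying report, which the article does not give.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `start_value` at `rate` for `years` years."""
    return start_value * (1 + rate) ** years


# Figures quoted in the article, in billions of USD; 3 years is an assumption.
YEARS = 3
print(f"Morgan Stanley, $12B -> $30B: {cagr(12, 30, YEARS):.1%} per year")
print(f"$12B grown at the quoted 34%: ${project(12, 0.34, YEARS):.1f}B")
print(f"Broadcom low end, $12B -> $60B: {cagr(12, 60, YEARS):.1%} per year")
print(f"Broadcom high end, $12B -> $90B: {cagr(12, 90, YEARS):.1%} per year")
```

The same arithmetic puts Broadcom's forecast in perspective: growing from $12 billion to $60 to $90 billion over three years implies roughly 71% to 96% annual growth, far steeper than Morgan Stanley's estimate.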

Additionally, major investment firms like Goldman Sachs have raised their price targets for Broadcom, citing the growing demand for custom chips and Broadcom's successful acquisition of VMware. Barclays and Truist also revised their price targets upwards, signaling confidence in Broadcom's potential in the AI chip market.

Conclusion: A Paradigm Shift in the AI Chip Market?

While Nvidia's GPUs have dominated the AI training market, the rise of ASICs, especially from companies like Broadcom, indicates that a shift is underway in AI hardware. Because ASICs offer superior efficiency, cost-effectiveness, and scalability for targeted workloads, many industry experts believe they could eventually surpass GPUs, particularly in the growing AI inference market. As Broadcom expands its ASIC offerings, Nvidia may face increasing competition that could challenge its current market position.

However, it's important to note that GPUs and ASICs are not mutually exclusive; both technologies will likely coexist, serving different needs in the AI ecosystem. The shift towards ASICs represents a diversification of AI hardware, one that could reshape the future of AI development.
