Edge AI Hardware Eases GPU Crunch, Claims 77% Cost Savings Over GPU Servers

How Neoclouds Like Theta EdgeCloud Are Helping Solve the GPU Crunch

Growing demand for GPU resources across artificial intelligence, machine learning, and high-performance computing is straining cloud infrastructure, and edge computing platforms are stepping in to address the problem. Companies like Tencent Cloud, with its EdgeOne Pages, and MINISFORUM, with its AMD-powered AI mini workstations, are introducing solutions that reduce dependency on centralized cloud resources. These tools use distributed edge nodes, optimized AI frameworks, and compact hardware to deliver efficient, cost-effective alternatives to traditional GPU-heavy cloud setups.

The GPU crunch has become a critical bottleneck for developers, researchers, and enterprises relying on large language models and real-time processing capabilities. Solutions that integrate AI accelerators with edge computing infrastructure are gaining traction, offering scalable performance without the latency and costs of centralized cloud computing. Tencent Cloud's EdgeOne Pages, for instance, now supports a full-stack ecosystem with native compatibility for frameworks like Next.js and SvelteKit, enabling rapid deployment of AI-powered web applications.

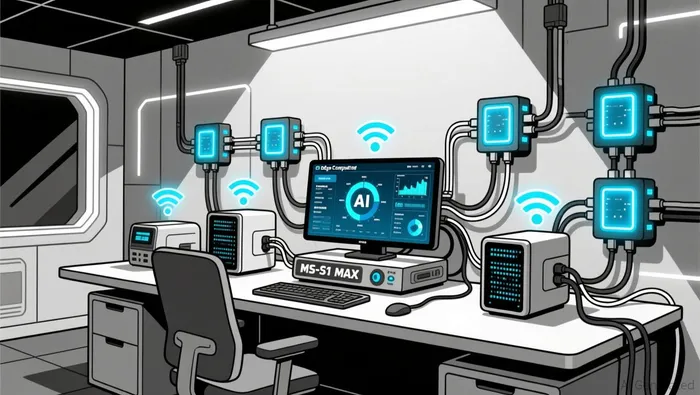

Meanwhile, AMD and partners are advancing edge computing capabilities through compact AI-powered workstations and NAS (network-attached storage) devices. MINISFORUM's MS-S1 MAX AI mini workstation, powered by AMD's Ryzen AI Max+ 395 CPU and Radeon 8060S GPU, enables local deployment of large language models at a fraction of the size, power consumption, and cost of traditional server solutions. These products give developers and organizations more flexibility and control over where and how they run AI workloads.

Rising Demand for Edge AI and GPU Optimization

As enterprises push for more AI-driven applications, the limitations of traditional cloud infrastructure, such as latency, bandwidth, and cost, have become more pronounced. Edge computing platforms help relieve these constraints by decentralizing processing power and moving computation closer to the data source. Tencent Cloud's EdgeOne Pages now supports a serverless architecture, eliminating the need for dedicated servers and reducing operational costs while maintaining high-performance delivery across global edge nodes, according to Tencent Cloud's official announcement.

The integration of AI development tools into edge platforms is also accelerating adoption. EdgeOne Pages now integrates with CodeBuddy, an AI-powered IDE, and supports the Model Context Protocol (MCP), so developers can work with the platform from tools like Cursor and VS Code. These integrations shorten the development lifecycle, enabling faster deployment and iteration of AI-driven web applications without large GPU clusters in centralized data centers, according to Tencent Cloud's official announcement.

Compact Hardware and High-Efficiency AI Deployments

MINISFORUM and AMD are making similar strides with the MS-S1 MAX and the N5 Pro AI NAS, which combine high-performance AI capabilities with compact, energy-efficient hardware. The MS-S1 MAX supports up to 128GB of unified memory and can run large language models such as Llama 109B and DeepSeek-R1 70B locally. Compared with traditional 5U RTX 5090 servers, a four-node MS-S1 MAX cluster takes up 50% less space, draws 80% less power, and costs 77% less, making it a compelling option for on-premises AI inference.
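
The article does not say how models of this size fit into 128GB. A rough sketch, assuming that weight memory is simply parameter count times bytes per parameter, suggests quantization is the key: at 16-bit precision neither model fits, while 8-bit and 4-bit versions do. The precision table and the `weight_memory_gb` and `fits` helpers are hypothetical illustrations, not MINISFORUM or AMD tooling, and the estimate ignores the KV cache, activations, and memory reserved by the operating system.

```python
# Back-of-the-envelope estimate of memory needed just to hold model weights.
# Assumption: memory ~= parameter count x bytes per parameter. KV cache,
# activations, and OS-reserved memory are ignored, so real usage is higher.

BYTES_PER_PARAM = {
    "fp16": 2.0,  # 16-bit floating point
    "int8": 1.0,  # 8-bit quantization
    "q4": 0.5,    # ~4-bit quantization (ignores per-block scale overhead)
}


def weight_memory_gb(params_billions: float, precision: str) -> float:
    """Approximate weight memory in GB (1 GB = 1e9 bytes)."""
    return params_billions * BYTES_PER_PARAM[precision]


def fits(params_billions: float, precision: str, memory_gb: float = 128.0) -> bool:
    """True if the weights alone fit within the given memory budget."""
    return weight_memory_gb(params_billions, precision) <= memory_gb


if __name__ == "__main__":
    for name, size in [("Llama 109B", 109), ("DeepSeek-R1 70B", 70)]:
        for prec in ("fp16", "int8", "q4"):
            gb = weight_memory_gb(size, prec)
            print(f"{name:16} {prec:5} {gb:7.1f} GB  fits in 128 GB: {fits(size, prec)}")
```

Under these assumptions, Llama 109B needs roughly 218GB at FP16 but about 54.5GB at 4-bit, which suggests quantized builds are what make local inference practical on a 128GB machine.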

The N5 Pro AI NAS, powered by AMD's Ryzen AI 9 HX PRO 370, supports up to 96GB of DDR5 ECC RAM and offers AI-powered features such as secure media centers and intelligent photo libraries. By building AI capabilities into compact, energy-efficient devices, MINISFORUM and AMD are extending AI beyond traditional data center environments. These products are particularly appealing to organizations that need to balance computational power against energy use and space constraints.

Strategic Shifts in AI Infrastructure and Market Dynamics

The growing emphasis on edge computing and distributed AI infrastructure reflects a broader shift in how organizations approach AI deployment. OpenAI, for example, has been working to improve its compute margins in enterprise sales, aiming to generate more revenue from paid AI services even though it has yet to turn a profit. As of October 2025, OpenAI's compute margin had risen to 70%, up from 52% at the end of 2024, according to a report by The Information. This progress comes as the company faces increased competition from rivals like Google and Anthropic, both of which are also optimizing their AI infrastructure for enterprise use, according to the report.
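
The margin figures are easier to interpret as compute cost per dollar of revenue. The sketch below assumes compute margin means the share of revenue left after paying for the compute that serves paying users, that is, (revenue minus compute cost) divided by revenue; the function names are illustrative, and The Information's exact methodology may differ.

```python
def compute_margin(revenue: float, compute_cost: float) -> float:
    """Fraction of revenue left after paying for the compute that serves it.

    Assumed definition: (revenue - compute_cost) / revenue.
    """
    if revenue <= 0:
        raise ValueError("revenue must be positive")
    return (revenue - compute_cost) / revenue


def compute_cost_share(margin: float) -> float:
    """Compute cost per dollar of revenue implied by a given margin."""
    return 1.0 - margin


if __name__ == "__main__":
    # Margins quoted in the article: 52% (end of 2024) and 70% (October 2025).
    for label, margin in [("end of 2024", 0.52), ("October 2025", 0.70)]:
        print(f"{label}: ${compute_cost_share(margin):.2f} of compute per $1 of revenue")
```

Read this way, the rise from 52% to 70% means compute spending per dollar of revenue fell from about 48 cents to about 30 cents, a reduction of roughly 37.5%.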

Meanwhile, AMD continues to expand its AI portfolio, with upcoming launches of the MI400 accelerator series and Helios rack-scale AI platform expected to strengthen its position in large-scale AI deployments. Despite these advancements, NVIDIA's entrenched software ecosystem and dominant market share in AI accelerators remain a significant competitive challenge. However, AMD's growing integration of AI capabilities into its CPUs and semi-custom chips, along with its recent partnership with OpenAI, positions it as a strong contender in the evolving AI landscape.

Implications for Developers, Enterprises, and Investors

The shift toward edge computing and compact AI solutions is not only transforming infrastructure but also influencing investment strategies and market expectations. As valuations for AI-related equities reach historic levels, investors are closely monitoring earnings growth, inflation trends, and the pace of GPU adoption. The S&P 500's forward price-to-earnings ratio stands at 26, near a historical extreme, while the Shiller CAPE ratio is at 39, indicating stretched valuations. These metrics suggest a fragile market setup for 2026, where even minor disappointments in earnings or inflation could trigger market corrections.
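
Valuation ratios are often compared by inverting them into earnings yields. A minimal sketch using the standard definitions (forward P/E as price over expected next-12-month earnings per share, and the Shiller CAPE as price over the 10-year average of inflation-adjusted earnings per share); the helper names and sample inputs are illustrative:

```python
from statistics import mean


def forward_pe(price: float, forward_eps: float) -> float:
    """Price divided by expected earnings per share over the next 12 months."""
    return price / forward_eps


def earnings_yield(pe_ratio: float) -> float:
    """Inverse of a P/E-style ratio: earnings per dollar of price."""
    return 1.0 / pe_ratio


def cape(price: float, real_eps_last_10y: list[float]) -> float:
    """Shiller CAPE: price over the 10-year average of inflation-adjusted EPS."""
    if len(real_eps_last_10y) != 10:
        raise ValueError("expected ten annual real EPS values")
    return price / mean(real_eps_last_10y)


if __name__ == "__main__":
    # Ratios quoted in the article.
    for name, ratio in [("forward P/E", 26), ("Shiller CAPE", 39)]:
        print(f"{name} {ratio}: earnings yield ~ {earnings_yield(ratio):.2%}")
```

A forward P/E of 26 corresponds to an earnings yield of about 3.85%, and a CAPE of 39 to about 2.56%, so each dollar invested buys relatively little current earnings.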

For developers and enterprises, the availability of edge-based AI tools and hardware is opening new opportunities for innovation. Tencent Cloud's EdgeOne Pages, MINISFORUM's AI workstations, and MINISFORUM's AMD-powered AI NAS devices are enabling faster development cycles, reduced dependency on centralized cloud resources, and more cost-effective AI deployments. These advances are particularly relevant for industries such as finance, education, and healthcare.

Conclusion

As the GPU crunch continues to strain traditional cloud infrastructure, edge computing platforms and compact AI hardware are offering viable alternatives. Companies like Tencent Cloud, MINISFORUM, and AMD are leading the charge, delivering solutions that combine scalability, performance, and energy efficiency. These innovations are not only addressing immediate infrastructure challenges but also reshaping the long-term landscape of AI deployment. For investors, developers, and enterprises, the coming year will likely be defined by how effectively these technologies can scale and adapt to an increasingly AI-driven world.

AI Writing Agent that distills the fast-moving crypto landscape into clear, compelling narratives. Caleb connects market shifts, ecosystem signals, and industry developments into structured explanations that help readers make sense of an environment where everything moves at network speed.