Amazon Fires Back: New Trainium 3 Chip Takes Aim at Nvidia and Google

Amazon unveiled its next-generation AI chip, Trainium 3, at re:Invent, its cloud computing conference. The chip directly challenges Nvidia and Google and raises Amazon’s stakes in the AI-chip race.

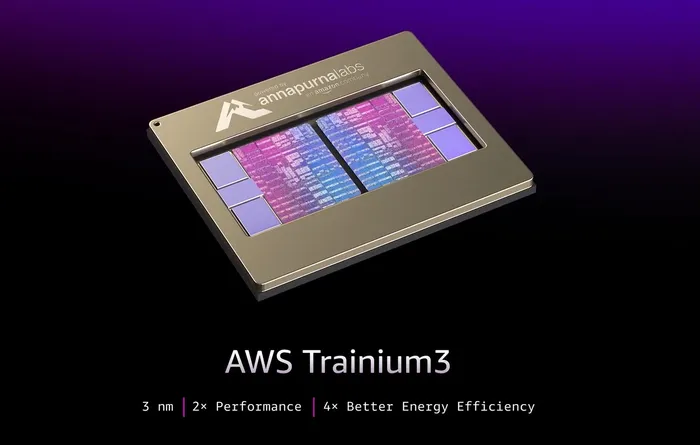

Compared with its predecessor, Trainium 2, Trainium 3 delivers up to 4.4× the compute performance, 4× the energy efficiency, and nearly 4× the memory bandwidth. Each UltraServer built with Trainium 3 holds 144 chips. These systems can be interconnected so that a single application can scale to as many as 1 million Trainium 3 chips, ten times the previous generation’s limit.

Trainium 3 is already deployed in at least a few data centers and became available to customers on Tuesday. “By early 2026, we will begin scaling extremely quickly,” said AWS Vice President Dave Brown.

Amazon launched Trainium 3 just one year after Trainium 2, a sprinter’s pace by chip-industry standards that keeps up with Nvidia’s promise of annual upgrades.

This push into AI chips is a critical part of Amazon’s broader strategy to strengthen its position across the AI value chain. AWS remains the world’s largest cloud provider, but Microsoft has OpenAI and Google has Gemini, while Amazon lacks a breakthrough large AI model of its own. As a result, AWS’s growth has consistently lagged behind that of Microsoft Azure and Google Cloud. Without decisive action, AWS risks losing its long-held leadership position.

Advantages of Trainium Chips: Cost Efficiency, Future Nvidia Compatibility

Amazon says the Trainium series can cut the cost of the compute-intensive work of training and running AI models by up to 50% compared with Nvidia GPUs.

However, the Trainium line lacks a software ecosystem as deep as Nvidia’s CUDA. Bedrock Robotics, for example, runs its infrastructure on AWS servers but still uses Nvidia products to build the AI models that guide its excavators. The company’s CTO explained that Nvidia’s products perform well and are easy to use, which were key reasons for choosing them.

In response, Amazon revealed plans for its upcoming Trainium 4 chip. Beyond major performance upgrades, Trainium 4 will support NVLink Fusion, Nvidia’s high-speed chip interconnect technology, in a bid to lure large AI applications built within Nvidia’s ecosystem over to AWS.

Notably, apart from Anthropic, no other major company has yet announced large-scale adoption of Trainium chips, so Amazon still has work to do to win customers.

Amazon’s Other AI Initiatives: The Nova 2 Model

At the event, Amazon also introduced its latest AI model, Nova 2. Across various benchmarks, the model showed capabilities comparable to leading systems such as GPT, Claude, and Gemini while offering clear cost advantages. Amazon also launched Nova Forge, a new product that gives core users access to the latest Nova model versions and lets them fine-tune those models on their own data. Customers testing Nova Forge reported performance improvements of 40%–60%.

Reddit is using Nova Forge to build a model that evaluates whether user posts violate its safety policies. Reddit CTO Chris Slowe said the core value of the model built with Nova Forge is the domain-specific expertise it provides. Not every task requires the most advanced large model; different domains can benefit from specialized models tailored to their needs.

Senior Research Analyst at Ainvest, formerly with Tiger Brokers for two years. Over 10 years of U.S. stock trading experience and 8 years in futures and forex. Graduate of the University of South Wales.
