AI in Warfare Expands as Pentagon Partners with OpenAI

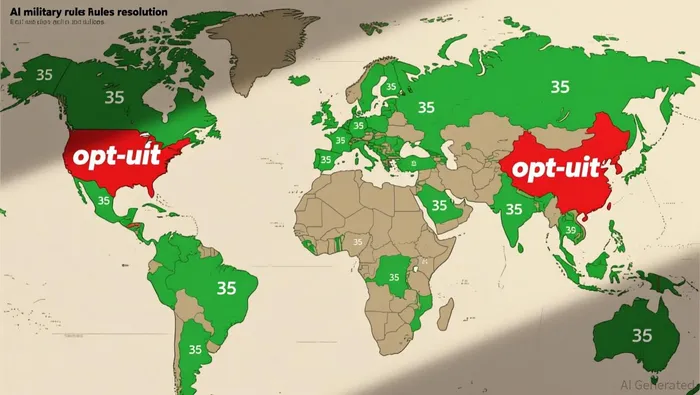

Thirty-five countries have backed AI military rules, though the U.S. and China have not, according to reports.

The U.S. Navy is testing AI for maintenance, decision-making, and maritime awareness, according to AFCEA.

OpenAI's ChatGPT is now accessible to 3 million U.S. military and civilian personnel via the Pentagon's genai.mil platform, according to Reuters.

The international community is increasingly focused on regulating the military use of artificial intelligence, as evidenced by the adoption of a UN resolution on Dec. 24, 2024. The resolution, backed by thirty-five countries, aims to establish a framework for responsible AI use in defense contexts, according to reports. The U.S. and China, however, have not backed it, which points to a potential divide in global AI governance.

In the U.S., the Department of Defense is actively integrating AI into its operations. The Pentagon has partnered with OpenAI to deploy a custom version of ChatGPT on its genai.mil platform, which serves 3 million military and civilian personnel. The move is part of a broader initiative to use AI for unclassified tasks and improve mission readiness, as reported.

The U.S. Navy is also exploring AI for maintenance forecasting and decision support as part of its ongoing digital transformation, according to AFCEA. These initiatives aim to enhance operational efficiency and adaptability in complex environments. Meanwhile, the Pentagon continues to seek expanded AI capabilities from companies like OpenAI, Google, and xAI.

What ethical concerns are emerging in military AI?

Anthropic, another major AI firm, has raised concerns about the use of its technology in autonomous weapons and domestic applications, according to eWeek. Executives have communicated these reservations to military officials, emphasizing the need for clear boundaries. Anthropic's CEO, Dario Amodei, has highlighted the double-edged nature of AI, noting both its transformative potential and the risks it poses, as noted in The New York Times.

These concerns reflect a growing industry-wide awareness of the ethical and strategic implications of AI in military contexts. While AI can enhance decision-making and operational efficiency, its use in autonomous targeting remains a contentious issue.

What strategic implications could AI have for global security?

The adoption of AI in military operations has the potential to reshape global security dynamics. The U.S. Navy's efforts to integrate AI into maintenance and decision support systems indicate a shift toward more agile and data-driven operations, according to AFCEA. The Pentagon's push for AI tools on unclassified networks is also part of a broader strategy to enhance national defense while maintaining data security, according to Reuters.

However, the absence of the U.S. and China from the resolution on military AI rules raises questions about the long-term effectiveness of such agreements, according to reports. The lack of universal participation may lead to a fragmented regulatory landscape, with some countries prioritizing AI advancement over ethical considerations.

What does this mean for investors in AI-related sectors?

Investors in AI companies should be aware of the evolving regulatory and ethical landscape. The Pentagon's partnerships with firms like OpenAI, Google, and xAI suggest sustained demand for AI tools in defense applications, according to Reuters. This demand is likely to drive innovation and investment in AI security and governance solutions.

However, the ethical concerns raised by companies like Anthropic indicate that regulatory and reputational risks may also increase, according to eWeek. Investors should monitor these developments closely, as they could influence the long-term growth and sustainability of AI-focused firms in the defense sector.
