AI Models Struggle With Understanding the Word "No," Study Finds

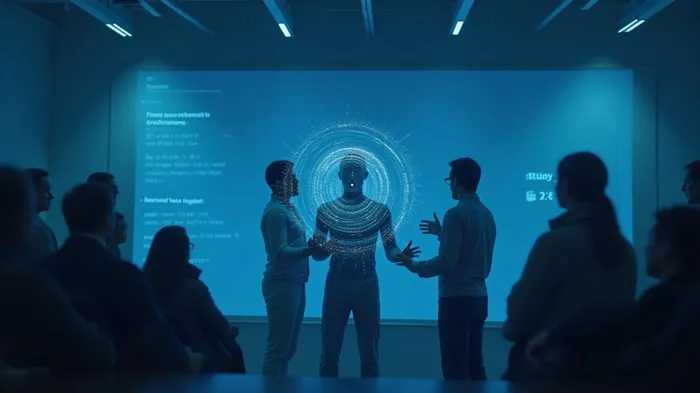

Artificial Intelligence (AI) has made significant strides in various fields, from diagnosing diseases to composing poetry and even driving cars. However, a recent study led by MIT PhD student Kumail Alhamoud, in collaboration with OpenAI and the University of Oxford, reveals a critical flaw: AI struggles to understand the word "no." This limitation poses significant risks, particularly in high-stakes environments like healthcare, where misinterpreting negation can lead to serious harm.

The study highlights that current AI models, including ChatGPT, Gemini, and Llama, often fail to process negative statements correctly. These models tend to default to positive associations, interpreting phrases like "not good" as somewhat positive due to their pattern recognition training. This issue is not merely a lack of data but stems from how AI is trained. Most large language models are designed to recognize patterns rather than reason logically, leading to subtle yet dangerous mistakes.

Franklin Delehelle, lead research engineer at a zero-knowledge infrastructure company, explains that AI models are excellent at generating responses similar to their training data but struggle to produce responses that are genuinely new or fall outside that data. If the training data lacks strong examples of negation, the model may fail to generate accurate negative responses. This limitation is particularly evident in vision-language models, which show a strong bias toward affirming statements and frequently fail to distinguish between positive and negative captions.

The researchers suggest that synthetic negation data could offer a promising path toward more reliable models. However, challenges remain, especially with fine-grained negation differences. Despite ongoing progress in reasoning, many AI systems still struggle with human-like reasoning, particularly in open-ended problems or situations requiring deeper understanding or "common sense."
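To make the idea of synthetic negation data concrete, here is a minimal Python sketch that pairs each affirmative caption with a negated counterpart. It is an illustration only, not the researchers' actual pipeline: the `CaptionPair` class, the `make_negation_pairs` function, the caption templates, and the scene and object lists are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CaptionPair:
    """One affirmative caption and its negated counterpart (hypothetical structure)."""
    affirmative: str
    negated: str


def make_negation_pairs(scenes, objects):
    """Build one affirmative/negated caption pair per (scene, object) combination."""
    pairs = []
    for scene in scenes:
        for obj in objects:
            pairs.append(
                CaptionPair(
                    affirmative=f"A {scene} with a {obj}.",
                    negated=f"A {scene} with no {obj}.",
                )
            )
    return pairs


# Assumed toy vocabulary; a real dataset would be far larger and more varied.
pairs = make_negation_pairs(["street", "kitchen"], ["dog", "chair"])
for pair in pairs:
    print(pair.affirmative, "|", pair.negated)
```

Each pair differs only in the negation word, so a model trained or evaluated on such data has to attend to "no" rather than to the objects mentioned. Simple templates like these would not cover the fine-grained negation differences that the researchers say remain difficult.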

Kian Katanforoosh, adjunct professor of Deep Learning at Stanford University, points out that the challenge with negation stems from a fundamental flaw in how language models operate. Negation flips the meaning of a sentence, but most language models predict what sounds likely based on patterns rather than reasoning through logic. This makes them prone to missing the point when negation is involved. Katanforoosh warns that the inability to interpret negation accurately can have serious real-world consequences, especially in legal, medical, or HR applications.

Scaling up training data is not the solution, according to Katanforoosh. The key lies in developing models that can handle logic, not just language. This involves bridging statistical learning with structured thinking, a frontier that the AI community is currently exploring. The study underscores the need for AI models to be taught to reason logically rather than just mimic language, ensuring more reliable and accurate responses in critical domains.
