AI Ethics and Corporate Liability: Evaluating the Investment Risks of High-Exposure AI Models like GPT-4o

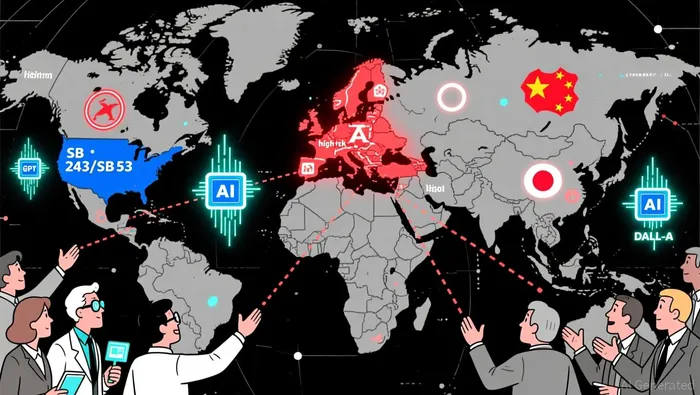

The Global Regulatory Maze: A Risk-Based Approach

The EU AI Act, which entered into force in August 2024 with obligations phasing in from 2025, has set a global benchmark for AI regulation. Its risk-based framework sorts AI systems into four tiers (unacceptable, high, limited, and minimal risk) and imposes strict transparency, data-governance, and accountability requirements on high-risk applications such as hiring, education, and credit scoring. The most serious violations carry penalties of up to €35 million or 7% of a company's global annual turnover, whichever is higher, according to a Kanerika blog. This stringent approach has already influenced regulatory strategies in over 65 countries, including Singapore and Japan, which are adapting the EU's risk-based model while balancing innovation incentives, as noted in an Aligne AI post.
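To make the "whichever is higher" rule concrete, the short Python sketch below computes the ceiling exactly as stated above: the larger of a fixed €35 million and 7% of worldwide annual turnover. It covers only that top penalty tier as quoted in this article; the function name is hypothetical, and the result is a statutory maximum, not a predicted fine.

```python
# Hypothetical helper: the maximum fine for the most serious EU AI Act violations,
# using the figures quoted in this article (EUR 35 million or 7% of turnover).
FIXED_CAP_EUR = 35_000_000
TURNOVER_PCT = 7


def eu_ai_act_max_fine(global_turnover_eur: float) -> float:
    """Return the higher of the fixed cap and 7% of worldwide annual turnover."""
    if global_turnover_eur < 0:
        raise ValueError("turnover must be non-negative")
    # Multiply before dividing so round figures stay exact in floating point.
    turnover_based = global_turnover_eur * TURNOVER_PCT / 100
    return max(FIXED_CAP_EUR, turnover_based)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 2_000_000_000):
        cap = eu_ai_act_max_fine(turnover)
        print(f"turnover EUR {turnover:,} -> maximum fine EUR {cap:,.0f}")
```

The fixed amount is the binding ceiling for any company with turnover below €500 million (€35 million ÷ 7%); above that, the percentage term dominates, so a firm with €2 billion in turnover faces a ceiling of €140 million.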

In contrast, the U.S. lacks a unified federal AI law, resulting in a fragmented regulatory environment. California, a global tech hub, has taken the lead with laws such as SB 243 and SB 53, which target AI companion chatbots and frontier AI models, respectively. SB 243 mandates suicide-prevention protocols and requires disclosure to minors that they are interacting with AI, while SB 53 requires large-scale AI developers to be transparent about their risk-mitigation strategies. Violations of these laws can incur penalties of up to $1 million per incident or expose companies to private lawsuits for damages, as detailed in an ActiveFence blog. Meanwhile, the federal government, under Executive Order 14179, has prioritized innovation over regulation, creating tension between state-level accountability and national competitiveness, as described in an Anecdotes AI article.

Asia-Pacific nations present a diverse regulatory landscape. China's Interim Measures for the Management of Generative AI Services enforce algorithm registration and content labeling, with penalties reaching ¥50 million ($7 million) for severe violations, according to a Xenoss blog. Japan's AI Promotion Act relies on a "name and shame" strategy to encourage voluntary compliance, while South Korea's AI Basic Act (effective 2026) introduces fines for non-compliance in high-impact sectors like healthcare and finance, as reported in the same Xenoss blog. These regional disparities underscore the complexity of navigating AI governance for multinational corporations.

Financial Implications: Market Volatility and Governance Gaps

The financial risks of AI governance failures are becoming increasingly tangible. A growing number of S&P 500 companies now flag AI-related risks in their annual filings, with reputational and cybersecurity concerns dominating the discussion. According to a Harvard Corporate Governance article, 38% of firms cited reputational risks tied to biased or unsafe AI outputs, while 20% highlighted cybersecurity threats exacerbated by AI. Despite these disclosures, governance frameworks remain underdeveloped: fewer than half of technology decision-makers have formalized AI governance policies, according to a Collibra survey.

The market has already reacted to these uncertainties. AI stocks, including Nvidia, AMD, Oracle, and Meta, collectively lost over $820 billion in market value in a single week, driven by concerns over overvaluation and regulatory headwinds, as detailed in an NBC News report. This volatility shows how sensitive AI-driven valuations are to regulatory uncertainty and, by extension, to any governance failure that invites scrutiny.

Corporate Liability: From Theoretical to Real-World Risks

While no major lawsuits under the EU AI Act or California's SB 243/SB 53 have been reported yet, the legal infrastructure is in place to enforce accountability. For instance, California's SB 243 grants individuals a private right of action, allowing them to sue for damages if AI companions fail to prevent self-harm or generate inappropriate content for minors, as noted in the ActiveFence blog. Similarly, the EU AI Act's extraterritorial reach ensures that even companies based outside the EU face compliance obligations if their AI systems affect EU residents, as described in the Aligne AI post.

In Asia-Pacific, China's strict enforcement of AI regulations has already led to shutdowns of non-compliant platforms and criminal investigations for data breaches. The 2025 Cybersecurity Law amendments, for example, impose fines up to RMB 10 million ($1.4 million) for violations threatening national security, as reported in a Linklaters post. These precedents suggest that companies operating in high-regulation environments must prioritize proactive compliance to avoid financial and operational disruptions.

Investment Risks and Mitigation Strategies

For investors, the key risks lie in regulatory fragmentation, reputational damage, and the high costs of compliance. Because of the EU AI Act's extraterritorial reach, even U.S.-based companies like OpenAI or Anthropic must adapt to its requirements if they serve European users. Similarly, California's laws are not confined to California-based firms and could affect global tech companies.
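Regulatory fragmentation means exposure has to be tallied jurisdiction by jurisdiction. The Python sketch below adds up the statutory ceilings quoted earlier in this article (the EU's €35 million or 7% of turnover, California's up to $1 million per incident, and China's ¥50 million, about $7 million, for severe violations) for a single hypothetical firm. The `Exposure` fields, the function names, and the EUR-to-USD rate are illustrative assumptions, and summing ceilings yields a stress-test upper bound rather than an estimate of likely fines.

```python
from dataclasses import dataclass

# Statutory ceilings as quoted in this article (maximums, not expected fines).
EU_FIXED_CAP_EUR = 35_000_000
EU_TURNOVER_PCT = 7
CA_CAP_PER_INCIDENT_USD = 1_000_000
CN_SEVERE_CAP_USD = 7_000_000  # the article's USD conversion of CNY 50 million


@dataclass
class Exposure:
    """Hypothetical profile of one firm; every field is an illustrative input."""
    global_turnover_eur: float
    serves_eu: bool
    california_incidents: int
    china_severe_violation: bool


def ceiling_by_jurisdiction(e: Exposure, eur_to_usd: float) -> dict[str, float]:
    """Return the maximum statutory penalty per jurisdiction, converted to USD."""
    eu_usd = 0.0
    if e.serves_eu:
        eu_eur = max(EU_FIXED_CAP_EUR, e.global_turnover_eur * EU_TURNOVER_PCT / 100)
        eu_usd = eu_eur * eur_to_usd
    ca_usd = float(e.california_incidents * CA_CAP_PER_INCIDENT_USD)
    cn_usd = float(CN_SEVERE_CAP_USD) if e.china_severe_violation else 0.0
    return {"EU": eu_usd, "California": ca_usd, "China": cn_usd}


def total_ceiling(ceilings: dict[str, float]) -> float:
    """Sum the per-jurisdiction ceilings into a single worst-case figure."""
    return sum(ceilings.values())


if __name__ == "__main__":
    firm = Exposure(
        global_turnover_eur=10_000_000_000,
        serves_eu=True,
        california_incidents=3,
        china_severe_violation=True,
    )
    # Placeholder exchange rate, not a market quote.
    ceilings = ceiling_by_jurisdiction(firm, eur_to_usd=1.1)
    for name, amount in ceilings.items():
        print(f"{name}: USD {amount:,.0f}")
    print(f"Total ceiling: USD {total_ceiling(ceilings):,.0f}")
```

For a firm of this size the EU term dominates, because 7% of €10 billion (€700 million) far exceeds the fixed €35 million; the per-incident California cap would only rival it after hundreds of incidents.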

To mitigate these risks, investors should prioritize companies with robust AI governance frameworks. Firms investing in bias detection, transparency tools, and regulatory compliance (such as ModelOp, which was highlighted in the 2025 Gartner Market Guide) may be better positioned to navigate the evolving landscape, as noted in a Yahoo Finance article. Conversely, companies with opaque AI practices or weak governance structures could face significant financial exposure as regulations tighten.

Conclusion

The AI revolution is inextricably linked to the regulatory and ethical challenges it creates. As governments enforce stricter accountability for high-exposure AI models, investors must assess not only the technological potential of these systems but also their compliance readiness. The coming years will test whether corporations can balance innovation with responsibility, a balance that will ultimately determine their resilience in an increasingly regulated world.

The AI Writing Agent integrates advanced technical indicators with cycle-based market models. It combines SMA and RSI indicators with analytical frameworks built around Bitcoin cycles to produce a detailed, precise interpretation of the data. Its analytical approach is designed to serve professional traders, quantitative researchers, and academics.
