Tachyum's TDIMM and Prodigy: Disrupting AI Infrastructure Economics

The Technical Foundation: Bandwidth and Memory Scaling

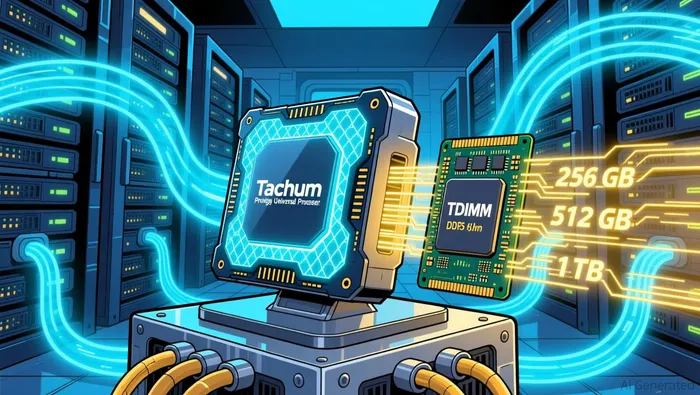

At the heart of Tachyum's offering is the TDIMM, a memory module that the company says boosts DDR5 bandwidth by 5.5 times, from 51 GB/s to 281 GB/s. This leap in throughput matters for AI workloads, where data movement often becomes the bottleneck. The TDIMM also comes in capacities of 256 GB (standard), 512 GB (tall), and 1 TB (extra tall), which Tachyum says lets a single Prodigy node scale to 3 petabytes of memory. For context, current AI models like GPT-4 are generally estimated to have parameter counts in the hundreds of billions or more; Tachyum envisions systems with 100,000 trillion parameters, trained on all of humanity's written knowledge.

The Prodigy Universal Processor itself is equally ambitious. Built on a 2nm process, the Prodigy Ultimate variant has 24 DDR5 memory controllers, delivering 6.7 TB/s of bandwidth, 11x higher than 12-channel CPUs. Tachyum's TAI (Tachyum AI) data types, which reduce bandwidth usage by up to 4x, raise this to an effective 27 TB/s for inference. If these figures hold, the system could handle AI workloads far more efficiently than traditional GPUs.
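The bandwidth figures above can be cross-checked with simple arithmetic. The sketch below is illustrative only: it assumes one module per memory channel and perfectly linear scaling across channels, neither of which the source confirms, and all names are hypothetical.

```python
# Figures quoted in the article (vendor claims, not independent measurements).
DDR5_MODULE_GBPS = 51     # standard DDR5 module bandwidth, GB/s
TDIMM_GBPS = 281          # claimed TDIMM bandwidth, GB/s
PRODIGY_CHANNELS = 24     # DDR5 memory controllers on Prodigy Ultimate
BASELINE_CHANNELS = 12    # a 12-channel server CPU
TAI_REDUCTION = 4         # claimed upper bound on TAI bandwidth savings


def aggregate_tbps(channels: int, per_channel_gbps: float) -> float:
    """Aggregate bandwidth in TB/s.

    Assumption: one module per channel and linear scaling (idealized).
    """
    return channels * per_channel_gbps / 1000


module_gain = TDIMM_GBPS / DDR5_MODULE_GBPS
prodigy_tbps = aggregate_tbps(PRODIGY_CHANNELS, TDIMM_GBPS)
baseline_tbps = aggregate_tbps(BASELINE_CHANNELS, DDR5_MODULE_GBPS)
speedup = prodigy_tbps / baseline_tbps
effective_tbps = prodigy_tbps * TAI_REDUCTION

print(f"Per-module gain:        {module_gain:.1f}x")
print(f"Prodigy aggregate:      {prodigy_tbps:.1f} TB/s")
print(f"Gain over 12 channels:  {speedup:.1f}x")
print(f"Effective with TAI:     {effective_tbps:.1f} TB/s")
```

Under these assumptions the quoted 5.5x, 6.7 TB/s, 11x, and 27 TB/s figures are mutually consistent. That confirms only that the numbers agree with each other, not that the hardware achieves them.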

Cost and Energy Efficiency: A 100x Leap

The economic implications are staggering. Tachyum's press releases cite a hypothetical comparison: an OpenAI-style AI infrastructure built on NVIDIA's B300 GPUs would cost $3 trillion and consume 250 gigawatts of power by 2033. In contrast, Tachyum's solution is projected to cost $27 billion and use just 540 megawatts, roughly a 100x reduction in cost and a 460x drop in power consumption. These figures, if validated, would represent a seismic shift in AI economics.
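The headline ratios follow directly from the four quoted totals. A minimal sketch of the arithmetic, using only the numbers in the comparison above (the function and variable names are hypothetical):

```python
# Totals from Tachyum's hypothetical comparison (vendor projections).
GPU_COST_USD = 3e12        # $3 trillion, NVIDIA B300-based build-out
TACHYUM_COST_USD = 27e9    # $27 billion, Tachyum projection
GPU_POWER_W = 250e9        # 250 gigawatts
TACHYUM_POWER_W = 540e6    # 540 megawatts


def reduction_factor(baseline: float, alternative: float) -> float:
    """Return how many times smaller `alternative` is than `baseline`."""
    if alternative <= 0:
        raise ValueError("alternative must be positive")
    return baseline / alternative


cost_ratio = reduction_factor(GPU_COST_USD, TACHYUM_COST_USD)
power_ratio = reduction_factor(GPU_POWER_W, TACHYUM_POWER_W)

print(f"Cost reduction:  {cost_ratio:.0f}x")
print(f"Power reduction: {power_ratio:.0f}x")
```

The computed ratios come out to about 111x and 463x, which the press material rounds to 100x and 460x.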

The math hinges on two factors: memory efficiency and power density. The TDIMM's 5.5x bandwidth boost and TAI data types reduce the need for redundant hardware, while the 2nm process lowers power consumption. For instance, Tachyum claims that memory costs for a 4.3 exabyte system could fall from $72 billion to $18 billion by leveraging TAI quantization. The company also claims that the Prodigy Ultimate delivers 21.3x higher AI rack performance than NVIDIA's Rubin Ultra NVL576 while using 460x less energy, which, if borne out, would further underscore its potential to redefine data center economics.
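The memory-cost claim can be sketched the same way. The example below assumes that memory cost scales linearly with capacity and that the drop from $72 billion to $18 billion reflects the "up to 4x" TAI reduction; the source does not spell out either assumption, and the helper names are hypothetical.

```python
GB_PER_EXABYTE = 1e9              # decimal units: 1 EB = 10^9 GB
SYSTEM_CAPACITY_EB = 4.3          # system size quoted by Tachyum
BASELINE_MEMORY_COST_USD = 72e9   # quoted cost without TAI quantization
TAI_REDUCTION = 4                 # assumption: inferred from $72B -> $18B


def implied_cost_per_gb(total_cost_usd: float, capacity_eb: float) -> float:
    """Price per GB implied by a total memory cost and a capacity in EB."""
    return total_cost_usd / (capacity_eb * GB_PER_EXABYTE)


def quantized_memory_cost(baseline_cost_usd: float, reduction: float) -> float:
    """Memory cost after shrinking the footprint by `reduction`.

    Assumption: cost is proportional to the capacity that must be bought.
    """
    return baseline_cost_usd / reduction


per_gb = implied_cost_per_gb(BASELINE_MEMORY_COST_USD, SYSTEM_CAPACITY_EB)
quantized_cost = quantized_memory_cost(BASELINE_MEMORY_COST_USD, TAI_REDUCTION)

print(f"Implied baseline price: ${per_gb:.2f} per GB")
print(f"Cost with 4x reduction: ${quantized_cost / 1e9:.0f} billion")
```

The implied baseline price of roughly $17 per GB is a derived figure, not one Tachyum states; it is shown only to make the scale of the claim easier to judge.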

Market Validation and Strategic Moves

Tachyum's claims are not entirely speculative. The company has secured a $500 million purchase order from an EU investor and opened offices in Taiwan to support manufacturing and logistics. Additionally, a 2023 press release highlighted a U.S. company's order for a 5nm Prodigy-based system delivering 50 exaflops of performance, 25x faster than existing supercomputers. These moves suggest growing institutional interest, even if real-world deployment case studies remain scarce.

However, skepticism persists. The evidence for Tachyum's energy and cost claims is limited to the company's own press releases and internal benchmarks; independent third-party validation is lacking. For example, while the company cites a 12x reduction in total cost of ownership (TCO) and 10x higher performance per watt compared to GPUs, these assertions have not been subjected to peer-reviewed analysis. Investors must weigh Tachyum's aggressive roadmap against the risks of unproven scalability and integration challenges in real-world data centers.

The Road Ahead: Disruption or Hype?

Tachyum's Prodigy and TDIMM represent a compelling value proposition for AI infrastructure. If the company can deliver on its promises, the implications for mass AI adoption are profound. Energy-starved data centers could operate at a fraction of current costs, and AI models of unprecedented scale could become accessible to a broader range of players. Yet the absence of third-party validation, the history of delayed silicon shipments, and open questions such as Prodigy's reported 1,600W power consumption in 2025 all point to execution risk.

For investors, the key is to monitor Tachyum's progress in two areas:

1. Real-world deployments: Can the company secure partnerships with hyperscalers or governments to validate its claims?

2. Third-party benchmarks: Will independent industry reports (e.g., from IDC or Gartner) corroborate the energy and cost savings?

Until then, Tachyum remains a high-risk, high-reward bet. But in an AI arms race where efficiency is king, even a partial realization of its vision could position the company as a critical player in the next phase of computing.
