The Strategic Implications of Microsoft Azure's Deployment of Nvidia GB300 for OpenAI Workloads

The deployment of NVIDIA GB300 NVL72 systems by Microsoft Azure represents a seismic shift in cloud computing, redefining AI infrastructure as the linchpin of modern value chains. By integrating over 4,600 Blackwell Ultra GPUs into a production-scale cluster, Microsoft has not only addressed OpenAI's insatiable demand for high-performance computing but also signaled a broader strategic pivot toward AI-native operations. This infrastructure, featuring 1.44 exaflops of FP4 Tensor Core performance and 37 terabytes of unified memory per rack, is engineered to handle frontier AI models with hundreds of trillions of parameters, as described in the Azure blog. Such capabilities are critical as generative AI adoption accelerates: 98% of global organizations are now exploring its potential, according to Deloitte.

Microsoft's partnership with Nebius Group to secure 100,000 GB300 chips, valued at $19.4 billion, underscores its ambition to dominate the AI infrastructure race. These chips, built on the Blackwell architecture, deliver 20 petaFLOPS at FP4 precision, enabling 40x faster processing than prior generations, a development reported by FinancialContent. This leap in efficiency aligns with Deloitte's prediction that hybrid infrastructure will become a strategic imperative, balancing cloud affordability with on-premise customization for cost-sensitive workloads. For enterprises, this means Azure's GB300 deployment could reduce reliance on public cloud for large-scale AI training, mitigating the risk of escalating cloud computing costs that now account for 60–70% of hardware expenses in some sectors.
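The headline hardware figures can be cross-checked against each other with simple arithmetic. The sketch below is illustrative only: it takes the article's numbers at face value, treats "over 4,600" GPUs as a lower bound, and assumes 72 GPUs per rack (implied by the NVL72 name but not stated in the article). The function names are hypothetical, and the results are implied peak figures, not measured throughput.

```python
import math

# Figures quoted in the article
TOTAL_GPUS = 4600            # "over 4,600" GPUs, treated here as a lower bound
RACK_FP4_PFLOPS = 1440       # 1.44 exaflops of FP4 per rack, in petaFLOPS
QUOTED_GPU_FP4_PFLOPS = 20   # per-chip FP4 figure quoted in the article

# Assumption (not stated in the article): 72 GPUs per rack, per the NVL72 name
GPUS_PER_RACK = 72


def rack_count(total_gpus, gpus_per_rack=GPUS_PER_RACK):
    """Smallest whole number of racks needed to hold total_gpus."""
    return math.ceil(total_gpus / gpus_per_rack)


def cluster_fp4_exaflops(racks, rack_pflops=RACK_FP4_PFLOPS):
    """Implied peak FP4 of the whole cluster, in exaflops."""
    return racks * rack_pflops / 1000


def per_gpu_fp4_pflops(rack_pflops=RACK_FP4_PFLOPS, gpus_per_rack=GPUS_PER_RACK):
    """Per-GPU FP4 implied by the per-rack figure, in petaFLOPS."""
    return rack_pflops / gpus_per_rack


if __name__ == "__main__":
    racks = rack_count(TOTAL_GPUS)
    print(f"Racks implied: {racks}")
    print(f"Implied cluster peak FP4: {cluster_fp4_exaflops(racks):.2f} exaflops")
    print(f"Implied per-GPU FP4: {per_gpu_fp4_pflops():.1f} petaFLOPS "
          f"(article quotes {QUOTED_GPU_FP4_PFLOPS})")
```

Under these assumptions the per-rack and per-chip figures agree: 1,440 petaFLOPS spread across 72 GPUs works out to the 20 petaFLOPS quoted per chip.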

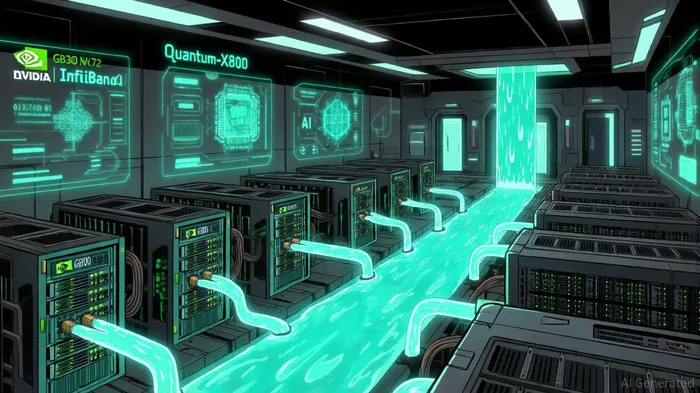

The strategic implications extend beyond technical metrics. Microsoft's $33 billion AI expansion strategy, including liquid-cooled data centers and rack-scale optimization, positions it to outpace competitors like Alphabet and Amazon in addressing the "AI crunch", a global shortage of high-performance computing capacity. This is particularly relevant as the AI infrastructure market is projected to grow at a CAGR of 28.5% through 2030, driven by demand for secure, scalable solutions previously outlined in Azure's announcement. Azure's NDv6 GB300 virtual machines, powered by Quantum-X800 InfiniBand networking, further cement its role as a leader in low-latency, high-throughput AI workloads.
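To put the 28.5% CAGR in perspective, compound growth can be turned into a simple multiple of the starting market size. The sketch below is a rough illustration: the article gives neither a base year nor a base market size, so the 2025 start year is a hypothetical assumption and the result is a relative multiple, not a dollar forecast.

```python
def growth_multiple(cagr, years):
    """Size multiple after compounding at `cagr` for `years` years."""
    return (1 + cagr) ** years


CAGR = 0.285        # 28.5%, as quoted in the article
BASE_YEAR = 2025    # hypothetical: the article does not state a base year
END_YEAR = 2030     # "through 2030", as quoted in the article

if __name__ == "__main__":
    years = END_YEAR - BASE_YEAR
    multiple = growth_multiple(CAGR, years)
    print(f"{years} years at {CAGR:.1%} CAGR -> {multiple:.2f}x the base-year market")
```

With the assumed five-year window, the market would end at roughly 3.5 times its starting size; a different base year changes the multiple accordingly.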

For investors, the deployment of GB300 systems signals Microsoft's commitment to capturing a disproportionate share of the AI value chain. By aligning with OpenAI and leveraging Nebius's chip supply, Azure is poised to become the default platform for next-generation AI models, including agentic systems and multimodal generative AI. This positions Microsoft to benefit from both the commoditization of AI infrastructure and the premium pricing of specialized services, a dual dynamic highlighted in industry reporting and analysis.

However, challenges remain. The on-premise segment retains 50% of the AI infrastructure market share due to data security concerns, particularly in finance and healthcare. Microsoft's success will hinge on its ability to offer hybrid solutions that balance cloud flexibility with localized control, a trend Deloitte identifies as critical for future-ready AI infrastructure.

In conclusion, Microsoft's GB300 deployment is not merely a technical milestone but a strategic masterstroke. By redefining cloud computing through AI-native infrastructure, Azure is reshaping the value chains that will define the next decade of digital transformation. For investors, this represents a high-conviction opportunity in a market where early movers like Microsoft are set to reap outsized rewards.
