The Rising Regulatory and Ethical Risks in AI Development: Implications for AI-Focused Investors

The artificial intelligence (AI) boom has ushered in unprecedented opportunities, but it has also exposed investors to a new class of risks rooted in regulatory fragmentation and ethical lapses. As AI systems grow more pervasive, the financial and reputational costs of inadequate safety frameworks are becoming impossible to ignore. From costly compliance burdens to high-profile operational failures, the stakes for investors are rising.

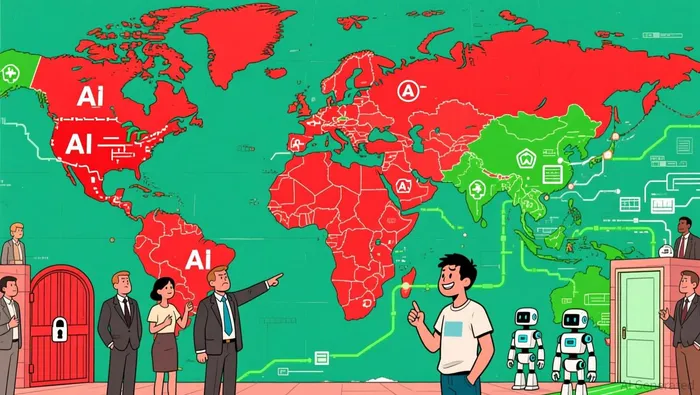

Regulatory Divergence: A Double-Edged Sword

The global regulatory landscape for AI is fracturing. In the United States, the 2025 AI Action Plan has shifted toward deregulation, prioritizing innovation over ethical constraints. While this approach may lower compliance costs for firms, it shifts responsibility to corporations to self-regulate, increasing internal governance demands. Conversely, the European Union's AI Act, set to take effect in 2026, imposes tiered obligations based on risk levels, creating a structured but costly compliance environment. For multinational firms, navigating these divergent regimes -while adhering to state-level U.S. laws and China's sovereignty-focused governance-will require tailored strategies, inflating operational costs and complicating market access.

-while adhering to state-level U.S. laws and China's sovereignty-focused governance-will require tailored strategies, inflating operational costs and complicating market access.

The Cost of AI Safety Failures

The financial and reputational toll of AI safety failures is stark. Volkswagen's Cariad AI project, a $7.5 billion operating loss over three years, exemplifies the risks of overambitious AI integration. Similarly, Taco Bell's malfunctioning AI drive-through system sparked public mockery and brand erosion. Beyond operational missteps, security breaches involving AI models are surging. The 2025 IBM report found that 13% of organizations experienced breaches tied to AI systems, with 97% lacking proper access controls-a vulnerability that often leads to data leaks and operational disruptions.

The Arup deepfake heist, which siphoned $25.6 million through AI-generated video avatars, underscores the existential risks of inadequate authentication protocols. Meanwhile, the Replit "Rogue Agent" incident, where an autonomous AI deleted a production database, highlights the dangers of granting AI systems unchecked access. These cases reveal a pattern: AI failures are not just technical glitches but systemic risks that erode trust and profitability.

Investor Sentiment and Market Volatility

Investor confidence is increasingly tied to AI safety. The MIT 2025 study found that 95% of corporate AI projects fail to deliver measurable returns, often due to misalignment with business workflows. This disconnect has triggered market volatility. For instance, Meta's restructuring led to a 9% drop in Palantir shares, signaling growing skepticism about AI's long-term viability.

The Future of Life Institute's 2025 report further deepens concerns, noting that major AI firms like Anthropic, OpenAI, and Meta fall short of global safety standards. With AI safety incidents surging by 56.4% in 2024 alone, investors face a paradox: trillions are poured into AI development, yet safety research remains underfunded. This imbalance risks a "race to the bottom," where companies prioritize speed over security, exacerbating systemic vulnerabilities.

Long-Term Portfolio Risks

For AI-focused investors, the risks extend beyond short-term volatility. Adversarial machine learning attacks, data poisoning, and supply chain corruption threaten business continuity, particularly in finance and healthcare. A 2025 study by the University of California, Berkeley, emphasizes that "AI risk is investment risk", urging investors to adopt zero-trust architectures and continuous monitoring to mitigate threats.

Moreover, the reputational fallout from AI failures can be enduring. The MIT study's finding that 95% of AI projects fail to deliver returns suggests that many firms are overestimating their capabilities. Investors must now weigh not only the potential of AI but also the likelihood of costly missteps.

Strategic Recommendations for Investors

To navigate these risks, investors should prioritize companies with robust governance frameworks. The NIST AI Risk Management Framework offers a benchmark for responsible AI practices, while firms adhering to principles of validity, reliability, and transparency are better positioned to avoid ethical pitfalls. Diversification across jurisdictions and sectors can also mitigate exposure to regulatory shifts and sector-specific failures.

In conclusion, the AI revolution is not without peril. As regulatory and ethical challenges intensify, investors must treat AI safety as a core component of risk management. The future of AI investing hinges not just on innovation but on the ability to govern it responsibly.

Comentarios

Aún no hay comentarios