Nvidia's Strategic Position in the AI Boom: A Deep Dive into AI Hardware Demand and Risks from OpenAI's Funding Model

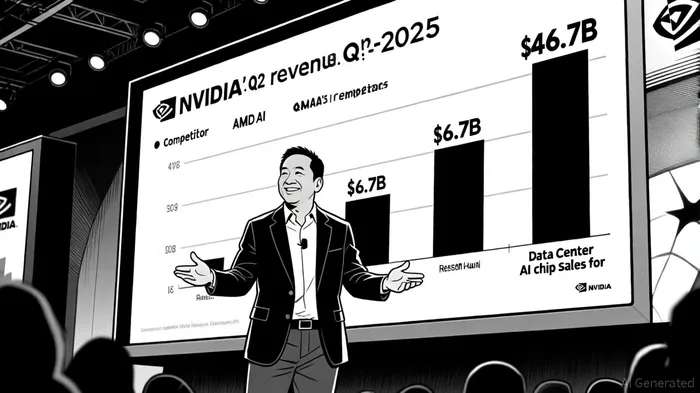

Nvidia's dominance in the AI hardware market has reached unprecedented levels. Its Q2 2025 revenue rose to $46.7 billion, a 56% year-over-year increase, driven by the Data Center segment, which accounted for 88% of total sales[1]. Much of that segment's success is attributed to the Blackwell GPU, which generated $27 billion in sales on its own[3]. According to a report by The Silicon Review, Nvidia holds between 70% and 95% of the market for AI accelerator chips, cementing its position as the de facto standard for AI infrastructure[4]. This dominance carries structural risks, however: the company's $100 billion investment in OpenAI has sparked antitrust concerns and raised questions about whether its growth model is sustainable over the long term[5].
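The headline figures can be cross-checked with simple arithmetic. The sketch below derives the year-earlier revenue implied by 56% growth and the dollar size of an 88% Data Center share. It uses only the numbers quoted above; the helper names are illustrative, and the outputs are derived arithmetic, not figures taken from Nvidia's filings.

```python
# Back-of-the-envelope checks on the headline figures quoted in the article.
# Inputs are the article's reported numbers; outputs are derived arithmetic only.


def prior_period_value(current: float, yoy_growth: float) -> float:
    """Value one year earlier implied by a current value and a YoY growth rate."""
    return current / (1.0 + yoy_growth)


def segment_value(total: float, share: float) -> float:
    """Dollar size of a segment given its fractional share of the total."""
    return total * share


quarter_revenue_bn = 46.7   # reported quarterly revenue, $ billions
yoy_growth = 0.56           # reported year-over-year growth (56%)
data_center_share = 0.88    # reported Data Center share of total sales (88%)

implied_prior_bn = prior_period_value(quarter_revenue_bn, yoy_growth)
implied_dc_bn = segment_value(quarter_revenue_bn, data_center_share)

print(f"Implied year-earlier revenue: ${implied_prior_bn:.1f}B")
print(f"Implied Data Center revenue:  ${implied_dc_bn:.1f}B")
```

With these inputs the script prints roughly $29.9B for the year-earlier quarter and $41.1B for the Data Center segment.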

Historical backtesting of Nvidia's performance after earnings beats paints a mixed picture. A strategy of buying NVDA after earnings surprises (proxied by positive quarter-over-quarter EPS growth) from 2022 to 2025 produced a cumulative return of 16.6% and an annualized return of 6.0%, but suffered a maximum drawdown of 31.7%. Its Sharpe ratio of 0.32 indicates relatively low risk-adjusted returns and underscores the volatility inherent in earnings-driven strategies. Although Nvidia's Q2 2025 beat fits its historical pattern of strong earnings, investors should be cautious about the deep drawdowns and inconsistent returns associated with this kind of timing.
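The backtest statistics above follow standard definitions. Here is a minimal Python sketch of how such metrics are typically computed from a series of periodic returns. It assumes simple (not log) returns, a zero risk-free rate by default, and 252 trading periods per year. The function names are illustrative, and `earnings_signal` is a hypothetical stand-in for the EPS-growth proxy described above, not the backtest's actual code.

```python
import math
from typing import Sequence


def cumulative_return(returns: Sequence[float]) -> float:
    """Compound periodic simple returns into one total return."""
    growth = 1.0
    for r in returns:
        growth *= 1.0 + r
    return growth - 1.0


def annualized_return(total_return: float, years: float) -> float:
    """Convert a total return earned over `years` into a constant per-year rate."""
    return (1.0 + total_return) ** (1.0 / years) - 1.0


def max_drawdown(returns: Sequence[float]) -> float:
    """Largest peak-to-trough decline of the equity curve, as a positive fraction."""
    equity, peak, worst = 1.0, 1.0, 0.0
    for r in returns:
        equity *= 1.0 + r
        peak = max(peak, equity)
        worst = max(worst, 1.0 - equity / peak)
    return worst


def sharpe_ratio(returns: Sequence[float], periods_per_year: int = 252,
                 risk_free_per_period: float = 0.0) -> float:
    """Annualized Sharpe ratio: mean excess return over its sample std. dev."""
    excess = [r - risk_free_per_period for r in returns]
    n = len(excess)
    if n < 2:
        raise ValueError("need at least two returns to estimate volatility")
    mean = sum(excess) / n
    var = sum((x - mean) ** 2 for x in excess) / (n - 1)
    return mean / math.sqrt(var) * math.sqrt(periods_per_year)


def earnings_signal(eps: Sequence[float]) -> list[bool]:
    """Hypothetical entry rule: True when EPS rose versus the prior quarter."""
    return [False] + [cur > prev for prev, cur in zip(eps, eps[1:])]
```

For example, `earnings_signal([1.0, 1.2, 1.1, 1.5])` returns `[False, True, False, True]`, and `max_drawdown([0.02, -0.05, 0.01, 0.03])` returns about 0.05, the dip from the first-period peak. Because drawdown is measured against the running peak rather than the starting value, a strategy can end with a positive cumulative return and still show a large drawdown, which is the pattern the backtest above describes.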

Market Leadership and Revenue Growth

Nvidia's strategic position in the AI boom rests on its technological edge and ecosystem lock-in. Its CUDA software platform, combined with leading-edge GPUs such as the H100 and Blackwell, creates high switching costs for customers and, in turn, high barriers for competitors[4]. In fiscal year 2025, the Data Center business alone generated $115.2 billion in revenue, reflecting intense demand for AI computing power[5]. Further tailwinds come from the global AI hardware market, which is projected to grow at a 22.43% CAGR from 2025 to 2034, reaching $210.5 billion by 2034[6].
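A CAGR projection is just compound growth, so the quoted figures can be run backwards to see what starting market size they imply. The sketch below assumes growth compounds over nine annual steps (2025 to 2034); if the underlying report counts periods differently, the implied base changes. The helper names are illustrative.

```python
def compound(value: float, rate: float, years: float) -> float:
    """Grow `value` at a constant annual `rate` for `years` periods."""
    return value * (1.0 + rate) ** years


def implied_base(future_value: float, rate: float, years: float) -> float:
    """Starting value consistent with a target `future_value` and a CAGR."""
    return future_value / (1.0 + rate) ** years


def cagr(start: float, end: float, years: float) -> float:
    """Constant annual growth rate that takes `start` to `end` in `years`."""
    return (end / start) ** (1.0 / years) - 1.0


# Projection quoted in the article: 22.43% CAGR, reaching $210.5B in 2034.
# Assumption: nine compounding periods between 2025 and 2034.
base_2025_bn = implied_base(210.5, 0.2243, 9)
print(f"Implied 2025 market size: ${base_2025_bn:.1f}B")
```

Under the nine-year assumption this prints an implied 2025 base of roughly $34.1B. This is arithmetic on the quoted projection, not an independent market estimate.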

Challenges remain, however. Geopolitical tensions have limited Nvidia's access to the Chinese market, where export restrictions left it with zero sales of its H20 AI chip[4]. Meanwhile, competitors such as AMD and Intel are working to close the gap. AMD, whose MI300X is optimized for inference workloads and has gained traction in open-source and academic environments, generated $6.7 billion in AI accelerator revenue in 2025[5]. Although these figures are small next to Nvidia's, they point to an increasingly competitive market.

OpenAI's Funding Model: A Double-Edged Sword

Nvidia's $100 billion investment in OpenAI, announced in late 2025, has reshaped the AI landscape but introduced significant structural risks. The deal, which includes a $10 billion equity stake and $90 billion in infrastructure funding, is structured around a lease model in which OpenAI accesses Nvidia's Vera Rubin GPUs rather than purchasing them outright[7]. This circular financing arrangement, in which Nvidia's capital is funneled into infrastructure that in turn generates revenue for Nvidia, has drawn sharp criticism from antitrust experts.

According to a Reuters analysis, the lease model reduces OpenAI's upfront costs by 10–15% but shifts financial risks, including depreciation and residual-value uncertainty, onto Nvidia[1]. Antitrust experts such as Andre Barlow warn that the partnership could entrench Nvidia's dominance, raising barriers for smaller AI startups and stifling innovation[5]. The U.S. Department of Justice has also stressed the need to prevent exclusionary practices in the AI supply chain, cautioning that such deals could restrict access to critical inputs like GPUs[6].
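To illustrate why leasing shifts depreciation and residual-value risk onto the lessor, here is a minimal sketch of a generic operating lease seen from the lessor's side. Every number is hypothetical, and the model does not reflect the actual terms of the Nvidia-OpenAI arrangement, which are not detailed here. The `Lease` class and its fields are illustrative names.

```python
from dataclasses import dataclass


@dataclass
class Lease:
    """Hypothetical operating lease of GPU hardware, from the lessor's side."""
    purchase_cost: float      # what the lessor pays for the hardware
    annual_payment: float     # fixed rent received from the lessee each year
    term_years: int           # length of the lease
    assumed_residual: float   # hardware value the lessor expects at lease end

    def annual_depreciation(self) -> float:
        """Straight-line depreciation down to the assumed residual value."""
        return (self.purchase_cost - self.assumed_residual) / self.term_years

    def lessor_profit(self, realized_residual: float) -> float:
        """Total rent plus what the hardware actually fetches, minus its cost."""
        return (self.annual_payment * self.term_years
                + realized_residual - self.purchase_cost)


# All figures are made up (in $ billions) purely to show the sensitivity.
lease = Lease(purchase_cost=10.0, annual_payment=2.5, term_years=4,
              assumed_residual=2.0)
for residual in (2.0, 1.0, 0.0):
    print(f"residual {residual:.1f} -> lessor profit {lease.lessor_profit(residual):.1f}")
```

With these inputs the loop prints lessor profits of 2.0, 1.0 and 0.0 as the realized residual value falls from 2.0 to 0.0. The lessee's payments are fixed, so any shortfall in what the used GPUs are worth at the end of the term lands on the lessor.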

The financial implications for OpenAI are equally concerning. Projections indicate the company could spend up to $450 billion on rented GPU capacity by 2030, raising questions about its long-term financial stability[7]. While the lease model offers flexibility, it also deepens OpenAI's dependency on Nvidia's ecosystem, potentially limiting its ability to pivot to alternative technologies or suppliers.

Long-Term Growth vs. Structural Risks

Nvidia's strategic position in the AI boom appears robust in the short term, with its Data Center segment poised to benefit from the global shift toward AI-driven infrastructure. However, the OpenAI partnership introduces regulatory and competitive risks that could undermine its long-term growth. Antitrust scrutiny is likely to intensify as regulators grapple with the implications of such large-scale, vertically integrated deals. Additionally, the rise of alternative architectures—such as AMD's Instinct series and Intel's Gaudi chips—could erode Nvidia's market share if they gain broader adoption[5].

For investors, the key question is whether Nvidia can balance its dominance in AI hardware with the need to foster a competitive ecosystem. While the company's technological leadership and CUDA ecosystem provide a moat, overreliance on partnerships like the one with OpenAI could backfire if regulatory or market dynamics shift.

Conclusion

Nvidia's strategic position in the AI boom is a testament to its innovation and market foresight. However, the structural risks associated with its funding model for OpenAI—and the broader concentration of power in the AI supply chain—cannot be ignored. As the AI hardware market evolves, investors must weigh Nvidia's unparalleled growth potential against the regulatory, financial, and competitive challenges that lie ahead.
